Architecture Design

Motivation

This document is intended to help newcomers learn the high-level architecture and the functionality of each component.

Architecture

There are currently 4 types of nodes in the cluster:

- Frontend: Frontend is a stateless proxy that accepts user queries over the Postgres wire protocol. It is responsible for parsing, validating, and optimizing each query, and for returning its results.

- ComputeNode: ComputeNode is responsible for executing the optimized query plan.

- Compactor: Compactor is a stateless worker node responsible for executing the compaction tasks for our storage engine.

- MetaServer: The central metadata management service. It also acts as a failure detector that periodically sends heartbeats to frontends and compute-nodes in the cluster. There are multiple sub-components running in MetaServer:

  - ClusterManager: Manages the cluster information, such as the address and status of nodes.

  - StreamManager: Manages the stream graph of RisingWave.

  - CatalogManager: Manages the table catalog in RisingWave. DDL goes through the catalog manager, and catalog updates are propagated to all frontend nodes asynchronously.

  - BarrierManager: Manages barrier injection and collection. The barrier manager initiates checkpoints at regular intervals.

  - HummockManager: Manages the SST file manifest and meta-info of Hummock storage.

  - CompactionManager: Manages the compaction status and task assignment of Hummock storage.

The topmost component is the Postgres client. It issues queries over the TCP-based Postgres wire protocol.

The leftmost component is the streaming data source. Kafka is the most representative system for streaming sources; Redpanda, Apache Pulsar, AWS Kinesis, and Google Pub/Sub are also widely used. Streams from Kafka are consumed and processed through the pipeline in the database.

The bottom-most component is AWS S3 or MinIO (an open-source, S3-compatible system). We employ a disaggregated architecture so that the compute-nodes can scale elastically without migrating the storage.

Execution Mode

There are 2 execution modes in our system serving different analytics purposes.

Batch-Query Mode

The first is the batch-query mode. Users issue such a query via a SELECT statement and the system answers immediately. This is the most typical RDBMS use case.

Let’s begin with a simple SELECT and see how it is executed.

SELECT SUM(t.quantity) FROM t GROUP BY t.company;

The query is sliced into multiple plan fragments. Each fragment is an independent scheduling unit and may have a different degree of parallelism. For simplicity, the parallelism is usually set to the total number of CPU cores in the cluster. For example, if there are 3 compute-nodes in the cluster, each with 4 CPU cores, the parallelism is set to 12 by default.
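The default described above is plain arithmetic. The following is a minimal sketch of it, assuming a hypothetical `ComputeNode` record and `default_parallelism` helper; neither name comes from the RisingWave codebase.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComputeNode:
    # Hypothetical description of a compute-node, for illustration only.
    name: str
    cpu_cores: int


def default_parallelism(nodes):
    """Return the total number of CPU cores across all compute-nodes."""
    return sum(node.cpu_cores for node in nodes)


# 3 compute-nodes with 4 CPU cores each, as in the example above.
cluster = [ComputeNode(name=f"compute-{i}", cpu_cores=4) for i in range(3)]
print(default_parallelism(cluster))
```

With the cluster from the text, this yields 3 × 4 = 12.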

Each parallel unit is called a task. Specifically, PlanFragment 2 will be distributed as 4 tasks to 4 CPU cores.
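To make the idea of a fragment running as several tasks concrete, here is a rough sketch of the GROUP BY query split across 4 tasks. It assumes a two-phase aggregation (a partial sum per task, then a final merge) and a round-robin row distribution. Both are illustrative assumptions rather than a description of the plan RisingWave actually produces, and the function names are hypothetical.

```python
from collections import defaultdict


def partition(rows, num_tasks):
    """Spread rows across tasks round-robin (an assumed distribution scheme)."""
    parts = [[] for _ in range(num_tasks)]
    for i, row in enumerate(rows):
        parts[i % num_tasks].append(row)
    return parts


def partial_sum(rows):
    """Phase 1: each task sums the quantities of the rows it received."""
    sums = defaultdict(int)
    for quantity, company in rows:
        sums[company] += quantity
    return dict(sums)


def merge(partials):
    """Phase 2: combine the per-task partial sums into the final result."""
    total = defaultdict(int)
    for partial in partials:
        for company, subtotal in partial.items():
            total[company] += subtotal
    return dict(total)


# Sample (quantity, company) rows of table t.
rows = [(2, "AMERICA"), (3, "ASIA"), (4, "AMERICA"), (5, "ASIA")]
tasks = partition(rows, num_tasks=4)
result = merge(partial_sum(task_rows) for task_rows in tasks)
print(result)
```

Because addition is associative, the merged result does not depend on how the rows were distributed among the tasks.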

Behind the TableScan operator, there’s a storage engine called Hummock that stores the internal states, materialized views, and the tables. Note that only the materialized views and tables are queryable. The internal states are invisible to users.

To know more about Hummock, you can check out “An Overview of RisingWave State Store”.

Streaming Mode

The other execution mode is the streaming mode. Users build streaming pipelines via the CREATE MATERIALIZED VIEW statement. For example:

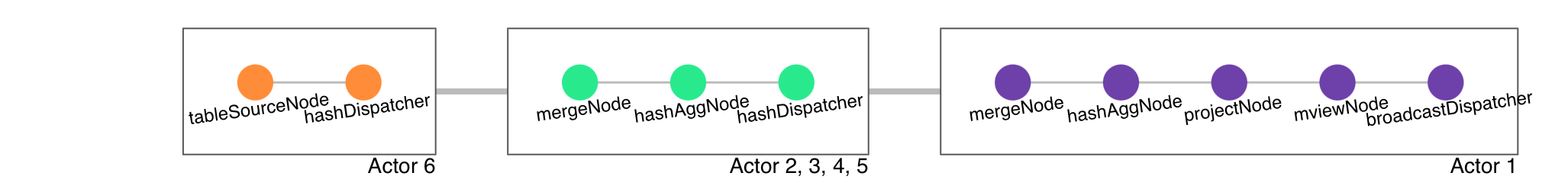

CREATE MATERIALIZED VIEW mv1 AS SELECT SUM(t.quantity) AS q FROM t GROUP BY t.company;

When the data source (e.g., Kafka) delivers a batch of records to the system, the materialized view is refreshed automatically.

Assume that we have a sequence [(2, "AMERICA"), (3, "ASIA"), (4, "AMERICA"), (5, "ASIA")]. After the sequence flows through the DAG, the MV will be updated to:

| SUM(quantity) | company |
|---|---|
| 6 | AMERICA |
| 8 | ASIA |

When another sequence [(6, "EUROPE"), (7, "EUROPE")] comes, the MV will soon become:

| SUM(quantity) | company |
|---|---|
| 6 | AMERICA |
| 8 | ASIA |
| 13 | EUROPE |
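The refresh behaviour above can be sketched as an aggregation that keeps a running sum per group and updates it as each batch arrives, instead of recomputing from scratch. This is a minimal illustration only; `SumByGroup` is a hypothetical class, not a RisingWave operator.

```python
class SumByGroup:
    """Keeps a running SUM(quantity) per company, updated batch by batch."""

    def __init__(self):
        # Maps company -> running sum of quantity.
        self.state = {}

    def apply(self, batch):
        """Fold a batch of (quantity, company) records into the state.

        Returns a copy of the current per-company sums.
        """
        for quantity, company in batch:
            self.state[company] = self.state.get(company, 0) + quantity
        return dict(self.state)


mv1 = SumByGroup()
first = mv1.apply([(2, "AMERICA"), (3, "ASIA"), (4, "AMERICA"), (5, "ASIA")])
second = mv1.apply([(6, "EUROPE"), (7, "EUROPE")])
print(first)
print(second)
```

Only the groups touched by a batch change: the second batch adds the EUROPE row and leaves AMERICA and ASIA as they were.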

mv1 can also serve as the source of other MVs. For example, mv2 and mv3 can reuse the results of mv1, which avoids duplicate computation.

The durability of materialized views in RisingWave is built upon a snapshot-based mechanism. Every time a snapshot is triggered, the internal states of each operator will be flushed to S3. Upon failover, the operator recovers from the latest S3 checkpoint.
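The snapshot-and-recover cycle can be sketched as follows. This is a simplified illustration under several assumptions: `ObjectStore` is an in-memory stand-in for S3, `SumOperator` is a hypothetical operator, and the integer `epoch` used to identify a snapshot is a name introduced here for the example.

```python
import copy


class ObjectStore:
    """In-memory stand-in for S3: maps a snapshot id (epoch) to saved state."""

    def __init__(self):
        self.checkpoints = {}

    def put(self, epoch, state):
        self.checkpoints[epoch] = copy.deepcopy(state)

    def latest(self):
        """Return (epoch, state) of the newest snapshot, or (None, {}) if none."""
        if not self.checkpoints:
            return None, {}
        epoch = max(self.checkpoints)
        return epoch, copy.deepcopy(self.checkpoints[epoch])


class SumOperator:
    """Hypothetical stateful operator that restores itself from the store."""

    def __init__(self, store):
        self.store = store
        # On startup (or failover), recover from the latest snapshot.
        self.epoch, self.state = store.latest()

    def process(self, batch):
        for quantity, company in batch:
            self.state[company] = self.state.get(company, 0) + quantity

    def checkpoint(self, epoch):
        # Flush the internal state to the object store.
        self.store.put(epoch, self.state)
        self.epoch = epoch


store = ObjectStore()
op = SumOperator(store)
op.process([(2, "AMERICA"), (3, "ASIA")])
op.checkpoint(epoch=1)
op.process([(4, "AMERICA")])  # applied after the snapshot, never flushed

# Simulate failover: a fresh operator starts from the latest snapshot.
recovered = SumOperator(store)
print(recovered.epoch, recovered.state)
```

In this sketch the recovered operator sees only what the last snapshot captured, so records processed after it have to be replayed from the source.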

Streaming state can be extremely large, often too large to hold entirely in memory (or to hold there efficiently), so we designed Hummock to be highly scalable. Compared to Flink’s RocksDB-based state store, Hummock is cloud-native and offers greater elasticity.

For more details on our streaming engine, please refer to “An Overview of RisingWave Streaming Engine”.