Introduction

This guide helps contributors learn how to develop RisingWave. Instructions for submitting code changes are in the contribution guidelines.

If you have questions, you can search for existing discussions or start a new discussion in the Discussions forum of RisingWave, or ask in the RisingWave Community channel on Slack. Please use the invitation link to join the channel.

To report bugs, create a GitHub issue.

Note: This URL previously hosted the crate rustdocs; they have moved to the path /risingwave/rustdoc.

Read the design docs

Before you start to make code changes, ensure that you understand the design and implementation of RisingWave. We recommend that you read the design docs listed in docs/README.md first.

You can also read the crate level documentation for implementation details, or run ./risedev doc to read it locally.

Learn about the code structure

- The src folder contains all of the kernel components. Refer to src/README.md for more details, including the Design Patterns in RisingWave.

- The docker folder contains Docker files to build and start RisingWave.

- The e2e_test folder contains the latest end-to-end test cases.

- The docs folder contains the design docs. If you want to learn about how RisingWave is designed and implemented, check out the design docs here.

- The dashboard folder contains RisingWave dashboard.

Contribution guidelines

Thanks for your interest in contributing to RisingWave! We welcome and appreciate contributions.

This document describes how to submit your code changes. To learn about the development process, see other chapters of the book. To understand the design and implementation of RisingWave, refer to the design docs listed in docs/README.md.

If you have questions, you can search for existing discussions or start a new discussion in the Discussions forum of RisingWave, or ask in the RisingWave Community channel on Slack. Please use the invitation link to join the channel.

To report bugs, create a GitHub issue.

Find Something to Work On

Issues labeled with good first issue are suitable for new RisingWave hackers.

They are relatively easy to begin with and can help you get familiar with one module of RisingWave.

Tests and miscellaneous checks

Before submitting your code changes, ensure you fully test them and perform necessary checks. The testing instructions and necessary checks are detailed in other sections of the book.

Submit a PR

Ask for Review

To get your PR reviewed and merged sooner, you can find and @ mention developers who recently worked on the same files. If you’re not sure who to ask, feel free to reach out to any active developers to help find relevant reviewers. Don’t hesitate to follow up politely if you haven’t received a response, or ask for help in the RisingWave Community Slack channel. We welcome you to be proactive in finding reviewers for your PR!

Pull Request title

As described here, a valid PR title should begin with one of the following prefixes:

- feat: A new feature

- fix: A bug fix

- doc: Documentation only changes

- refactor: A code change that neither fixes a bug nor adds a feature

- style: A refactoring that improves code style

- perf: A code change that improves performance

- test: Adding missing tests or correcting existing tests

- build: Changes that affect the build system or external dependencies (example scopes: .config, .cargo, Cargo.toml)

- ci: Changes to RisingWave CI configuration files and scripts (example scopes: .github, ci (Buildkite))

- chore: Other changes that don’t modify src or test files

- revert: Reverts a previous commit

For example, a PR title could be:

- refactor: modify executor protobuf package path

- feat(execution): enable comparison between nullable data arrays, where (execution) means that this PR mainly focuses on the execution component.

You may also check out previous PRs in the PR list.
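The prefix rule above can be checked mechanically before you open a PR. The following is a minimal sketch, not the project's actual CI check: the function name check_pr_title is hypothetical, and it only assumes the prefix list given above with an optional parenthesized scope.

```shell
# Hypothetical helper: prints "valid" if the title starts with one of the
# prefixes listed above (optionally followed by a "(scope)"), then ": " and
# a non-empty description; prints "invalid" otherwise.
check_pr_title() {
  local title="$1"
  local prefixes='feat|fix|doc|refactor|style|perf|test|build|ci|chore|revert'
  if printf '%s\n' "$title" | grep -Eq "^(${prefixes})(\([^()]+\))?: .+"; then
    echo "valid"
  else
    echo "invalid"
  fi
}

check_pr_title "feat(execution): enable comparison between nullable data arrays"
check_pr_title "update stuff"
```

The first call prints valid and the second prints invalid, because the second title has no recognized prefix.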

Pull Request description

- If your PR is small (such as a typo fix), you can go brief.

- If it is large and you have changed a lot, it’s better to write more details.

Sign the CLA

Contributors will need to sign RisingWave Labs’ CLA.

Cherry pick the commit to release candidate branch

We have a GitHub Action to help cherry-pick commits from main branch to a release candidate branch, such as v*.*.*-rc where * is a number.

Check out the details at: https://github.com/risingwavelabs/risingwave/blob/main/.github/workflows/cherry-pick-to-release-branch.yml

To trigger the action, add the appropriate label to the PR on the main branch:

https://github.com/risingwavelabs/risingwave/blob/main/.github/workflows/cherry-pick-to-release-branch.yml#L10

The action runs when the PR on the main branch is merged:

- If git cherry-pick does not find any conflicts, it will open a PR to the release candidate branch, and assign the original author as the reviewer.

- If there is a conflict, it will open an issue and make the original author the assignee.

How to build and run RisingWave

Set up the development environment

macOS

To install the dependencies on macOS, run:

brew install postgresql cmake protobuf tmux cyrus-sasl lld openssl@3 libomp parallel

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

Debian-based Linux

To install the dependencies on Debian-based Linux systems, run:

sudo apt install make build-essential cmake protobuf-compiler curl postgresql-client tmux lld pkg-config libssl-dev libsasl2-dev libblas-dev liblapack-dev libomp-dev parallel

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

If you encounter a CMake error when compiling some third-party crates, it may be because the CMake version installed by the package manager (i.e. brew/apt) is too new and is incompatible with the old CMake files in these crates. You can first uninstall it, then manually download the required version from the official source.

nix shell

If you use nix, you can also enter the nix shell via:

nix develop ./develop/nix

All dependencies will be automatically downloaded and configured.

You can also use direnv to automatically enter the nix shell:

direnv allow

Check out flake.nix to read more information!

Then you’ll be able to compile and start RisingWave!

.cargo/config.toml contains rustflags configurations like -Clink-arg and -Ctarget-feature. Since it will be merged with $HOME/.cargo/config.toml, check the config files and make sure they don’t conflict if you have global rustflags configurations for e.g. linker there.

If you want to build RisingWave with Embedded Python UDF feature, you need to install Python 3.12.

To install Python 3.12 on macOS, run:

brew install python@3.12

To install Python 3.12 on Debian-based Linux systems, run:

sudo apt install software-properties-common

sudo add-apt-repository ppa:deadsnakes/ppa

sudo apt-get update

sudo apt-get install python3.12 python3.12-dev

If the default python3 version is not 3.12, please set the PYO3_PYTHON environment variable:

export PYO3_PYTHON=python3.12
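To decide whether the PYO3_PYTHON override is needed, you can compare the default interpreter version first. This is only a sketch: the helper name pyo3_python_override is hypothetical, and it assumes python3.12 is the command name under which Python 3.12 was installed by the steps above.

```shell
# Hypothetical helper: given the output of `python3 --version`
# (e.g. "Python 3.11.4"), print the interpreter name to use for PYO3_PYTHON,
# or print nothing if the default python3 is already 3.12.
pyo3_python_override() {
  case "$1" in
    "Python 3.12"|"Python 3.12."*) ;;   # default is already 3.12
    *) echo "python3.12" ;;
  esac
}

# Usage: only export the variable when an override is needed.
if command -v python3 >/dev/null 2>&1; then
  override="$(pyo3_python_override "$(python3 --version 2>&1)")"
  if [ -n "$override" ]; then
    export PYO3_PYTHON="$override"
  fi
fi
```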

Start and monitor a dev cluster

RiseDev is the RisingWave developers’ tool. You can now use RiseDev to start a dev cluster by running the risedev script in the repo’s root directory. It is as simple as:

./risedev d # shortcut for ./risedev dev

psql -h localhost -p 4566 -d dev -U root

The default dev cluster includes meta-node, compute-node and frontend-node processes, and an embedded volatile in-memory state storage. No data will be persisted. This configuration is intended to make it easier to develop and debug RisingWave.

To stop the cluster:

./risedev k # shortcut for ./risedev kill

To view the logs:

./risedev l # shortcut for ./risedev logs

To clean local data and logs:

./risedev clean-data

Tips for compilation

If you detect memory bottlenecks while compiling, either allocate some disk space on your computer as swap memory, or lower the compilation parallelism with CARGO_BUILD_JOBS, e.g. CARGO_BUILD_JOBS=2.
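One rough way to pick a CARGO_BUILD_JOBS value is to divide the available memory by a per-job budget. The sketch below is illustrative only: the helper name suggest_build_jobs is hypothetical, and the 4 GiB per job used in the example is an assumption, not a measured figure for RisingWave.

```shell
# Hypothetical helper: suggest a CARGO_BUILD_JOBS value from the available
# memory (GiB) and an assumed per-job memory budget (GiB). Never below 1.
suggest_build_jobs() {
  local mem_gib="$1"
  local per_job_gib="$2"
  local jobs=$(( mem_gib / per_job_gib ))
  if [ "$jobs" -lt 1 ]; then
    jobs=1
  fi
  echo "$jobs"
}

# Example: 16 GiB of RAM with an assumed 4 GiB per job.
echo "CARGO_BUILD_JOBS=$(suggest_build_jobs 16 4)"
```

With these inputs the example prints CARGO_BUILD_JOBS=4. Adjust the per-job budget to what you observe on your own machine.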

Configure additional components

There are a few additional components supported by RiseDev.

Use the ./risedev configure command to enable and disable components.

- Hummock (MinIO + MinIO-CLI): Enable this component to persist state data.

- Prometheus and Grafana: Enable this component to view RisingWave metrics. You can view the metrics through a built-in Grafana dashboard.

- Postgres/MySQL/SQLite: Enable this component if you want to persist metadata node data.

- Kafka: Enable this component if you want to create a streaming source from a Kafka topic.

- Grafana Tempo: Use this component for tracing.

Enabling a component with the ./risedev configure command will only download the component to your environment. To allow it to function, you must revise the corresponding configuration setting in risedev.yml and restart the dev cluster.

For example, you can modify the default section to:

default:
  - use: minio
  - use: meta-node
  - use: compute-node
  - use: frontend
  - use: prometheus
  - use: grafana
  - use: kafka
    persist-data: true

Now you can run ./risedev d to start a new dev cluster. The new dev cluster will contain components as configured in the yaml file. RiseDev will automatically configure the components to use the available storage service and to monitor the target.

You may also add multiple compute nodes in the cluster. The ci-3cn-1fe config is an example.

Configure system variables

You can check src/common/src/config.rs to see all the configurable variables.

If additional variables are needed, include them in the correct sections (such as [server] or [storage]) in src/config/risingwave.toml.

Start the playground

If you do not need to start a full cluster to develop, you can issue ./risedev p to start the playground, where the metadata node, compute nodes and frontend nodes are running in the same process. Logs are printed to stdout instead of separate log files.

./risedev p # shortcut for ./risedev playground

For more information, refer to README.md under src/risedevtool.

You can also start the playground with cargo directly:

cargo run --bin risingwave -- playground

Then, connect to the playground instance via:

psql -h localhost -p 4566 -d dev -U root

Build Profiles

RisingWave uses Cargo profiles to manage build settings. To briefly introduce Cargo profiles, here is a snippet from the Cargo References:

Profiles provide a way to alter the compiler settings, influencing things like optimizations and debugging symbols.

Cargo has 4 built-in profiles:

dev, release, test, and bench. The profile is automatically chosen based on which command is being run if a profile is not specified on the command-line. In addition to the built-in profiles, custom user-defined profiles can also be specified.

All profiles discussed in this document are defined in the Cargo.toml file in the root directory of the project. Please always refer to it for the most up-to-date information.

Built-in Profiles

RisingWave tweaks some settings of the built-in profiles to better fit its needs, in the sections of [profile.<built-in-profile>] in Cargo.toml. For example,

- dev: for local development and testing
  - completely disables LTO to speed up the build time

- release: for local testing with near-production performance
  - completely disables LTO to speed up the build time
  - embeds full debug information to help with debugging in production

Custom Profiles

RisingWave also defines some custom profiles that inherit from the built-in profiles, in the sections of [profile.<custom-profile>] in Cargo.toml. For example,

- production: for distribution and production deployment
  - inherits from release
  - enables aggressive code optimizations (like LTO) for maximum performance, at the cost of significantly increased build time

- ci-dev: for pull-request pipelines in CI
  - inherits from dev
  - tweaks some settings to reduce the build time and binary size
  - enables code optimizations for 3rd-party dependencies to improve CI performance

- ci-release: for main and main-cron pipelines in CI
  - inherits from release
  - tweaks some settings to reduce the build time and binary size
  - enables more runtime checks (like debug assertions and overflow checks) to catch potential bugs

- ci-sim: for madsim simulation tests in CI
  - similar to ci-dev
  - enables slight code optimizations for all crates to improve CI performance under single-threaded madsim execution

Comparisons

To give a better idea of the differences between the profiles, here is a matrix comparing the profiles:

| Profile | Debug Info | cfg(debug_assertions) | Performance | Build Time |
|---|---|---|---|---|
| dev | Full | true | Bad | Fastest |
| release | Full | false | Good | Slow |
| production | Full | false | Best | Slowest |
| ci-dev | Backtrace only | true | Medium | Fast |
| ci-release | Backtrace only | true | Good | Slow |
| ci-sim | Backtrace only | true | Medium | Medium |

Some miscellaneous notes:

- Compared to “Backtrace only”, “Full” debug information additionally includes the capability to attach a debugger at runtime or on core dumps, to inspect variables and stack traces.

- There are also other subtle differences like incremental compilation settings, overflow checks, and more. They are not listed here for brevity.

- cfg(debug_assertions) can be roughly used to determine whether it’s a production environment or not. Note that even though ci-release contains release in its name, the debug_assertions are still enabled.

Choose a Profile

By default, RisingWave (and RiseDev) uses the dev profile for local development and testing. To use release profile instead, you can set the corresponding configuration entry by running risedev configure. Other profiles are for their specific use cases and are not meant to be used directly by developers, thus not available with RiseDev.

Testing

Before you submit a PR, fully test the code changes and perform necessary checks.

The RisingWave project enforces several checks in CI. Every time the code is modified, you need to perform the checks and ensure they pass.

- Lint

- Unit and integration tests

- Planner tests

- End-to-end tests

- Fuzzing tests

- DocSlt tests

- Deterministic simulation tests

- Backwards compatibility tests

Lint

RisingWave requires all code to pass fmt, clippy, sort and hakari checks. Run the following commands to install test tools and perform these checks.

./risedev install-tools # Install required tools for running unit tests

./risedev c # Run all checks. Shortcut for ./risedev check

Unit and integration tests

RiseDev runs unit tests with cargo-nextest. To run unit tests:

./risedev test # Run unit tests

Some ideas and caveats for writing tests:

- Use expect_test to write data driven tests that can automatically update results.

- It’s recommended to write new tests as integration tests (i.e. in tests/ directory) instead of unit tests (i.e. in src/ directory).

  Besides, put integration tests under tests/integration_tests/*.rs, instead of tests/*.rs. See Delete Cargo Integration Tests and #9878 for more details.

You might want to read How to Test for more good ideas on testing.

Planner tests

RisingWave’s SQL frontend has SQL planner tests.

End-to-end tests

We use sqllogictest-rs to run RisingWave e2e tests.

Refer to Sqllogictest .slt Test File Format Cookbook for the syntax.

Before running end-to-end tests, you will need to start a full cluster first:

./risedev d

Then to run the end-to-end tests, you can use one of the following commands according to which component you are developing:

# run all streaming tests

./risedev slt-streaming -p 4566 -d dev -j 1

# run all batch tests

./risedev slt-batch -p 4566 -d dev -j 1

# run both

./risedev slt-all -p 4566 -d dev -j 1

Use -j 1 to create a separate database for each test case, which can ensure that previous test case failure won’t affect other tests due to table cleanups.

Alternatively, you can also run some specific tests:

# run a single test

./risedev slt -p 4566 -d dev './e2e_test/path/to/file.slt'

# run all tests under a directory (including subdirectories)

./risedev slt -p 4566 -d dev './e2e_test/path/to/directory/**/*.slt'

After running e2e tests, you may kill the cluster and clean data.

./risedev k # shortcut for ./risedev kill

./risedev clean-data

RisingWave’s codebase is constantly changing. The persistent data might not be stable. In case of unexpected decode errors, try ./risedev clean-data first.

Fuzzing tests

SqlSmith

Currently, SqlSmith supports e2e and frontend fuzzing. Take a look at Fuzzing tests for more details on running it locally.

DocSlt tests

As introduced in #5117, DocSlt tool allows you to write SQL examples in sqllogictest syntax in Rust doc comments. After adding or modifying any such SQL examples, you should run the following commands to generate and run e2e tests for them.

# generate e2e tests from doc comments for all default packages

./risedev docslt

# or, generate for only modified package

./risedev docslt -p risingwave_expr

# run all generated e2e tests

./risedev slt-generated -p 4566 -d dev

# or, run only some of them

./risedev slt -p 4566 -d dev './e2e_test/generated/docslt/risingwave_expr/**/*.slt'

These will be run on CI as well.

Deterministic simulation tests

Deterministic simulation is a powerful tool to efficiently search for bugs and reliably reproduce them. In case you are not familiar with this technique, here is a talk and a blog post for a brief introduction.

See also the blog posts for a detailed writeup:

- Deterministic Simulation: A New Era of Distributed System Testing (Part 1 of 2)

- Applying Deterministic Simulation: The RisingWave Story (Part 2 of 2)

Unit and e2e tests

You can run normal unit tests and end-to-end tests in deterministic simulation mode.

# run deterministic unit test

./risedev stest

# run deterministic end-to-end test

./risedev sslt -- './e2e_test/path/to/directory/**/*.slt'

When your program panics, the simulator will print the random seed of this run:

thread '<unnamed>' panicked at '...',

note: run with `MADSIM_TEST_SEED=1` environment variable to reproduce this error

Then you can reproduce the bug with the given seed:

# set the random seed to reproduce a run

MADSIM_TEST_SEED=1 RUST_LOG=info ./risedev sslt -- './e2e_test/path/to/directory/**/*.slt'

More advanced usages are listed below:

# run multiple times with different seeds to test reliability

# it's recommended to build in release mode for a fast run

MADSIM_TEST_NUM=100 ./risedev sslt --release -- './e2e_test/path/to/directory/**/*.slt'

# configure cluster nodes (by default: 2fe+3cn)

./risedev sslt -- --compute-nodes 2 './e2e_test/path/to/directory/**/*.slt'

# inject failures to test fault recovery

./risedev sslt -- --kill-meta --etcd-timeout-rate=0.01 './e2e_test/path/to/directory/**/*.slt'

# see more usages

./risedev sslt -- --help

Deterministic tests are included in CI as well. See the CI script for details.

Integration tests

src/tests/simulation contains some special integration tests that are designed to be run in deterministic simulation mode. In these tests, we have more fine-grained control over the cluster and the execution environment to test some complex cases that are hard to test in normal environments.

To run these tests:

./risedev sit-test <test_name>

Sometimes in CI you may see a backtrace, followed by an error message with a MADSIM_TEST_SEED:

161: madsim::sim::task::Executor::block_on

at /risingwave/.cargo/registry/src/index.crates.io-6f17d22bba15001f/madsim-0.2.22/src/sim/task/mod.rs:238:13

162: madsim::sim::runtime::Runtime::block_on

at /risingwave/.cargo/registry/src/index.crates.io-6f17d22bba15001f/madsim-0.2.22/src/sim/runtime/mod.rs:126:9

163: madsim::sim::runtime::builder::Builder::run::{{closure}}::{{closure}}::{{closure}}

at /risingwave/.cargo/registry/src/index.crates.io-6f17d22bba15001f/madsim-0.2.22/src/sim/runtime/builder.rs:128:35

note: Some details are omitted, run with `RUST_BACKTRACE=full` for a verbose backtrace.

context: node=6 "compute-1", task=2237 (spawned at /risingwave/src/stream/src/task/stream_manager.rs:689:34)

note: run with `MADSIM_TEST_SEED=2` environment variable to reproduce this error

You may use that to reproduce it in your local environment. For example:

MADSIM_TEST_SEED=2 ./risedev sit-test test_backfill_with_upstream_and_snapshot_read
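When the seed has to be pulled out of a long CI log, a small filter avoids copying it by hand. This is a sketch under assumptions: the helper name extract_madsim_seed is hypothetical, and it relies only on the "MADSIM_TEST_SEED=<number>" text appearing in the log as in the output shown above.

```shell
# Hypothetical helper: read log text on stdin and print the seed from the
# first line that contains "MADSIM_TEST_SEED=<number>", if any.
extract_madsim_seed() {
  sed -n 's/.*MADSIM_TEST_SEED=\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Example with a sample line in the format printed by the simulator.
sample='note: run with `MADSIM_TEST_SEED=2` environment variable to reproduce this error'
printf '%s\n' "$sample" | extract_madsim_seed
```

The printed number can then be passed as MADSIM_TEST_SEED to ./risedev sit-test <test_name> or ./risedev sslt, as described above.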

Backwards compatibility tests

This tests backwards compatibility between the earliest minor version and the latest minor version of RisingWave (e.g. 1.0.0 vs 1.1.0).

You can run it locally with:

./risedev backwards-compat-test

In CI, you can make sure the PR runs it by adding the label ci/run-backwards-compat-tests.

Debugging

Debug playground using vscode

To step through risingwave locally with a debugger you can use the launch.json and the tasks.json provided in vscode_suggestions. After adding these files to your local .vscode folder you can debug and set breakpoints by launching Launch 'risingwave p' debug.

Observability

RiseDev supports several observability components.

Cluster Control

risectl is the tool for providing internal access to the RisingWave cluster. See

cargo run --bin risectl -- --help

… or

./risedev ctl --help

for more information.

Monitoring

Uncomment grafana and prometheus lines in risedev.yml to enable Grafana and Prometheus services.

Tracing

Compute nodes support streaming tracing. Tracing is not enabled by default. You need to use ./risedev configure to download the tracing components first. After that, you will need to uncomment tempo service in risedev.yml and start a new dev cluster to allow the components to work.

Traces are visualized in Grafana. You may also want to uncomment grafana service in risedev.yml to enable it.

Dashboard

You may use RisingWave Dashboard to see actors in the system. It is started along with the meta node and is available at http://127.0.0.1:5691/.

The development instructions for dashboard are available here.

Logging

The Rust components use tokio-tracing to handle both logging and tracing. The default log level is set as:

- Third-party libraries: warn

- Other libraries: debug

To configure log levels, launch RisingWave with the environment variable RUST_LOG set as described here.

There are also some logs designated for debugging purposes, with target names starting with events::.

For example, by setting RUST_LOG=events::stream::message::chunk=trace, all chunk messages will be logged as they pass through the executors in the streaming engine. Search in the codebase to find more of them.
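Several filters can be combined in one RUST_LOG value. The sketch below assumes the usual comma-separated "target=level" directive syntax described in the linked documentation; the helper name build_rust_log is hypothetical.

```shell
# Hypothetical helper: join a default level and any number of
# "target=level" directives into a single comma-separated RUST_LOG value.
build_rust_log() {
  local value="$1"
  shift
  local directive
  for directive in "$@"; do
    value="${value},${directive}"
  done
  echo "$value"
}

# Keep everything at "info" but trace chunk messages in the streaming engine.
build_rust_log info events::stream::message::chunk=trace
```

This prints info,events::stream::message::chunk=trace, which can be exported as RUST_LOG before launching RisingWave.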

Metrics

The contents of this document may be subject to frequent change. It covers what each metric measures, and what information we may derive from it.

Barrier Latency

Prerequisite: Checkpoint

This metric measures the duration from when a barrier is injected into all sources in the stream graph until it has flowed through all executors in the graph.

What can we understand from it?

Usually when examining barrier latency, we look at high barrier latency.

There are two contributing factors to it:

- Time taken to actually process the streaming messages.

- Buffer capacity for streaming messages.

Processing costs

When injecting a new barrier, there will usually be streaming messages in the stream graph (unless it’s the initial barrier). Since we keep total order for streaming messages, this means that all streaming messages currently in the stream graph have to be processed before the barrier can pass through. If barrier latency is high, it could mean a long time is taken to process these streaming messages. Concretely, here are some costs of processing streaming messages:

- CPU cost of evaluating expressions.

- I/O remote exchange between fragments.

- Stateful executor cache misses (for instance hash-join and hash-agg). These result in extra costs to access state on S3.

Buffer capacities

Next, high barrier latency could also be caused by buffers in the graph. If some downstream buffer is congested, we will be unable to queue and continue processing upstream messages.

For instance, if the channel in the exchange executor is full, upstream messages cannot be sent through this channel. This means the upstream executor will be unable to continue processing new stream messages, until some space on the buffer is freed.

The various buffer sizes can currently be adjusted via options in the developer configuration file. For instance, options to configure buffer size of the exchange executor can be found here.

Another subtle cause is that a large buffer size can also worsen barrier latency. Suppose stream message processing is at its limit, and there’s high latency as a result. Typically, backpressure kicks in and the source is throttled. If buffer sizes are too large, or if there are many buffers, backpressure will not be applied to the source immediately. During this delay, we will continue to see high barrier latency. A heuristic algorithm is on the way to deal with this: https://github.com/risingwavelabs/risingwave/issues/8654.

CPU Profiling Guide

Profiling on host

not-perf is an easy-to-use profiler and flamegraph tool: https://github.com/koute/not-perf.git

Record samples:

nperf record -p `pidof compute-node` -o perf.data

Generate flamegraph:

nperf flamegraph --merge-threads perf.data > perf.svg

Profiling remote compute nodes

You can profile remote compute nodes from a local machine by running the following command.

./risedev ctl profile cpu --sleep [seconds]

All compute nodes will be profiled for the given number of seconds, and the generated flame graphs will be transferred to .risingwave/profiling/ on your local machine.

Note: To profile compute nodes remotely, please make sure all remote nodes have a public IP address accessible from your local machine (where you are running risedev).

CPU Profiling on OSX

Get the pid of the node you want to profile.

pgrep compute-node

Use cargo flamegraph:

cargo install flamegraph

sudo flamegraph -o flamegraph.svg --pid [pid]

When you’re satisfied, you can Ctrl+C to stop the profiler.

open flamegraph.svg

Memory (Heap) Profiling Guide

Note that the content below is Linux-exclusive.

What is Heap Profile?

A heap profiler records the stack trace of the allocation of each live object, so it’s possible that function A allocates something and then hands it over to struct B. In this case, the allocation will still be attributed to A.

Internals

RisingWave uses tikv-jemallocator on Linux, which is a Rust wrapper of jemalloc, as its memory allocator. On other platforms, RisingWave uses the default allocator.

Luckily, jemalloc provides built-in profiling support (official wiki). jemallocator exposes the feature via a cargo feature ‘profiling’. Here is a simple guide to profiling with jemallocator.

For RisingWave, Pull Request #4871 (feat: support heap profiling from risedev, by fuyufjh) added everything needed. Just follow the steps below.

Step 1 - Collect Heap Dump

Depending on the deployment, check the corresponding section for instructions.

Manually profile RisingWave

1. Profile RisingWave (locally) with risedev

Run a local cluster in an EC2 instance with an additional environment variable RISEDEV_ENABLE_HEAP_PROFILE.

RISEDEV_ENABLE_HEAP_PROFILE=1 ./risedev d full

Under the hood, risedev sets the environment variable MALLOC_CONF for the RisingWave processes. Here is the implementation.

By default, the profiler will output a profile result on every 4GB memory allocation. After you run a query and wait for a while, lots of .heap files will be generated in the current folder:

...

compactor.266308.15.i15.heap

compactor.266308.16.i16.heap

compactor.266308.17.i17.heap

compactor.266308.18.i18.heap

...

compute-node.266187.116.i116.heap

compute-node.266187.117.i117.heap

compute-node.266187.118.i118.heap

compute-node.266187.119.i119.heap

...

2. Profile RisingWave in testing pipelines

Currently, some testing pipelines, such as longevity tests, have memory profiling enabled by default, but others, such as performance benchmarks, do not.

To enable heap profiling of compute nodes in benchmark pipelines, set the following environment variable when starting a job:

ENABLE_MEMORY_PROFILING=true

Under the hood, the pipeline script passes the value to kube-bench’s parameter benchmark.risingwave.compute.memory_profiling.enable (code here), and then kube-bench sets the environment variable on the RisingWave Pods (code here).

Note that this is only for compute nodes. If you need to run profiling on other nodes, or need to tune the parameters of profiling, you may modify the parameters in risingwave-test’s env.override.toml manually and run the job with that branch. (Example)

3. Profile RisingWave in Kubernetes/EKS

If you run into an OOM issue in Kubernetes, you will first need to enable memory profiling and reproduce the problem.

To enable memory profiling, set the environment variable MALLOC_CONF on the Pods.

# Example: `statefulsets` for CN and Meta

kubectl edit statefulsets/benchmark-risingwave-compute-c

# Example: `deployments` for other nodes

kubectl edit deployments/benchmark-risingwave-connector-c

Add the MALLOC_CONF env var. Note that prof_prefix specifies the path and file name prefix of the dump files. By default, /risingwave/cache/ is mounted to HostPath and will persist after Pod restarts, so we use it as the dump path here.

env:
  - name: MALLOC_CONF
    value: prof:true,lg_prof_interval:38,lg_prof_sample:19,prof_prefix:/risingwave/cache/cn

The suggested values of lg_prof_interval are different for different nodes. See risedev code: compactor_service, compute_node_service.rs, meta_node_service.rs.
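jemalloc interprets the lg_ options as base-2 logarithms of byte counts, so it can help to convert them when choosing values. The following sketch uses the example MALLOC_CONF value above; the helper names malloc_conf_get and lg_to_bytes are hypothetical.

```shell
# Hypothetical helper: print the value of one key from a MALLOC_CONF string.
malloc_conf_get() {
  printf '%s\n' "$1" | tr ',' '\n' | sed -n "s/^$2://p"
}

# Hypothetical helper: convert a log2 byte count into bytes.
lg_to_bytes() {
  echo $(( 1 << $1 ))
}

conf='prof:true,lg_prof_interval:38,lg_prof_sample:19,prof_prefix:/risingwave/cache/cn'
interval_lg="$(malloc_conf_get "$conf" lg_prof_interval)"
sample_lg="$(malloc_conf_get "$conf" lg_prof_sample)"

# Report the intervals in more readable units.
echo "dump interval: $(( $(lg_to_bytes "$interval_lg") >> 30 )) GiB of allocation"
echo "sample interval: $(( $(lg_to_bytes "$sample_lg") >> 10 )) KiB of allocation"
```

For the example value this reports a dump every 256 GiB of allocation and a sample every 512 KiB. By the same arithmetic, a 4 GiB dump interval corresponds to lg_prof_interval:32.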

Afterwards, the memory dump should be written to the specified folder. Use kubectl cp to download it to your local machine.

4. Dump memory profile with risectl

You can dump a heap profile with risectl for any worker node that has Jemalloc profiling enabled (MALLOC_CONF=prof:true).

./risedev ctl profile heap --dir [dumped_file_dir] \
  --worker-type frontend \
  --worker-type compute-node \
  --worker-type compactor

--worker-type is repeatable. If omitted, it defaults to all worker types that support heap profiling, i.e. frontend, compute-node, and compactor.

The dumped files will be saved in the directory you specified.

Note: To profile nodes remotely via risectl, make sure all remote nodes have a public IP address accessible from your local machine (where you are running risedev).

Dump memory profile with dashboard on Cloud

For Cloud deployments, the necessary configuration is already done, and you can directly dump heap profiles from the Heap Profiling page:

- Open the dashboard (e.g. http://<meta-host>:5691/ if served by meta node, or http://localhost:3000/ in dev mode).

- Go to the Heap Profiling page.

- Select a worker node and click Dump.

The dumped files will be saved on the target node.

Besides manual dumps, RisingWave deployments on Cloud will also auto dump heap profiles when the process memory usage crosses a configured threshold. See [server.heap_profiling] section in the configuration file.

Step 2 - Analyze Heap Dump

After you have the heap dump files, you can analyze them with jeprof to generate a collapsed file for visualization.

Note that each .heap file is a full snapshot rather than an increment. Hence, simply pick the latest file (or any historical snapshot).
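Picking the latest file can be scripted. The sketch below assumes file names shaped like <prefix>.<pid>.<seq>.i<seq>.heap, as in the listing earlier, and that all names come from a single process; the helper name latest_heap_file is hypothetical.

```shell
# Hypothetical helper: read .heap file names on stdin and print the one with
# the highest sequence number (the third field from the end, split on dots).
latest_heap_file() {
  awk -F. '{ print $(NF-2) "\t" $0 }' | sort -k1,1n | tail -n 1 | cut -f2-
}

# Example with names in the format shown earlier.
printf '%s\n' \
  compute-node.266187.116.i116.heap \
  compute-node.266187.119.i119.heap \
  compute-node.266187.20.i20.heap | latest_heap_file
```

The example prints compute-node.266187.119.i119.heap. The sort is numeric, so sequence 119 ranks above 20 even though a plain text sort would order them differently.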

Manually use jeprof to analyze heap dump

jeprof is a utility provided by jemalloc to analyze heap dump files. It reads both the executable binary and the heap dump to produce a full heap profile.

Note that the heap dump file must be analyzed along with exactly the same binary that it was generated from. If the memory dump is collected from Kubernetes, please refer to 2. Use jeprof in Docker images.

1. Use jeprof locally

jeprof is bundled with jemallocator and is built by cargo. Use it as follows:

# find jeprof binary

find . -name 'jeprof'

# set execution permission

chmod +x ./target/release/build/tikv-jemalloc-sys-22f0d47d5c562226/out/build/bin/jeprof

Faster jeprof (recommended)

On some platforms jeprof runs very slowly. The bottleneck is addr2line. To speed it up from 30 minutes to 3 seconds, use the addr2line from gimli-rs:

git clone https://github.com/gimli-rs/addr2line

cd addr2line

cargo b --examples -r

cp ./target/release/examples/addr2line <your-path>

2. Use jeprof in Docker images

jeprof is included in RisingWave image v1.0.0 or later. For earlier versions, please copy a jeprof binary into the container manually.

Find a Linux machine and use the docker command to start an environment with the specific RisingWave version. Here, -v $(pwd):/dumps mounts the current directory to the /dumps folder inside the container, so that you don’t need to copy the files in and out.

docker run -it --rm --entrypoint /bin/bash -v $(pwd):/dumps ghcr.io/risingwavelabs/risingwave:latest

After you have jeprof ready, you can use it to generate a collapsed file:

jeprof --collapsed binary_file heap_file > heap_file.collapsed

For example:

jeprof --collapsed /risingwave/bin/risingwave jeprof.198272.123.i123.heap > jeprof.198272.123.i123.heap.collapsed

Analyze with dashboard on Cloud

For Cloud deployments, the necessary toolchain is already set up for you. Simply analyze heap dump files from the Heap Profiling page in the dashboard:

- Open the dashboard (e.g. http://<meta-host>:5691/ if served by meta node, or http://localhost:3000/ in dev mode).

- Go to the Heap Profiling page.

- Select a worker node, choose the dump source (Auto or Manually) and a dump file.

- Click Analyze to download the generated .collapsed file.

Step 3 - Visualize Flame Graph

We recommend analyzing the collapsed file with speedscope: just drop the .collapsed file into it, then click Left Heavy in the top-left corner to merge shared call stacks.

Alternative: Generate flame graph locally

Download and unpack the FlameGraph utility.

Run

./flamegraph.pl --color=mem --countname=bytes heap_file.collapsed > flamegraph.svg

Example:

./flamegraph.pl --color=mem --countname=bytes jeprof.198272.4741.i4741.collapsed > flamegraph.svg

Steps 2 and 3 can also be combined into one line with a pipe:

jeprof --collapsed target/release/risingwave compute-node.10404.2466.i2466.heap | ~/FlameGraph/flamegraph.pl --color=mem --countname=bytes > flamegraph.svg

Micro Benchmarks

We have micro benchmarks for various components such as storage, stream and batch executors.

Running Micro Benchmarks

You can run a benchmark by specifying its name.

For instance, to run the json_parser micro benchmark:

cargo bench json_parser

Generating Flamegraph for Micro Benchmarks

Note: Flamegraph generation depends on perf. You will need a Linux machine to run it.

- Install cargo-flamegraph:

cargo install flamegraph

- Install perf. If on Ubuntu:

sudo apt install linux-tools

If using EC2, you may need this instead:

sudo apt install linux-tools-aws

On an EC2 instance you may also need to set the paranoid level to 1, to give the profiler the necessary permissions:

sudo sh -c "echo 1 >/proc/sys/kernel/perf_event_paranoid"

- Run flamegraph + benchmark (change json_parser to whichever benchmark you want to run):

cargo flamegraph --bench json_parser -- --bench

Within a benchmark, there are also typically multiple benchmark groups. For instance, within the json_parser bench, there are json_parser and debezium_json_parser_(create/read/update/delete). To filter, you can just append a regex. For instance, to bench only json_parser:

cargo flamegraph --bench json_parser -- --bench ^json_parser

You can take a look at the Criterion Docs for more information.

Develop Connectors

This page describes the development workflow to develop connectors. For design docs, see

RisingWave supports a lot of connectors (sources and sinks). However, developing connectors is tricky because it involves external systems:

- Before developing and testing, it’s troublesome to set up the development environment

- During testing, we need to seed the external system with test data (perhaps with some scripts)

- The test relies on the configuration of the setup. e.g., the test needs to know the port of your Kafka in order to

- We need to do the setup for both CI and local development.

Our solution is: we resort to RiseDev, our all-in-one development tool, to help manage external systems and solve these problems.

Before going to the specific methods described in the sections below, the principles we should follow are:

- environment-independent: It should be easy to start a cluster and run tests on your own machine, other developers’ machines, and CI.

  - Don’t use hard-coded configurations (e.g., localhost:9092 for Kafka).

  - Don’t write too much logic in ci/scripts. Let CI scripts be thin wrappers.

- self-contained tests: It should be straightforward to run one test case, without worrying about where the script that prepares the test is.

  - Don’t put the setup logic, running logic, and verification logic of a test in different places.

Reference: for the full explanations of the difficulties and the design of our solution, see here.

The following sections first walk you through the development workflow for existing connectors, and finally explain how to extend the development framework to support a new connector.

- Set up the development environment

- End-to-end tests

- Adding a new connector to the development framework

Set up the development environment

RiseDev supports starting external connector systems (e.g., Kafka, MySQL) just like how it starts the RisingWave cluster, and other standard systems used as part of the RisingWave Cluster (e.g., MinIO, etcd, Grafana).

You write the profile in risedev.yml (or risedev-profiles.user.yml). For example, the following config includes Kafka and MySQL, which will be used to test sources.

my-cool-profile:
  steps:
    # RisingWave cluster
    - use: minio
    - use: sqlite
    - use: meta-node
      meta-backend: sqlite
    - use: compute-node
    - use: frontend
    - use: compactor
    # Connectors
    - use: kafka
      address: message_queue
      port: 29092
    - use: mysql
      port: 3306
      address: mysql
      user: root
      password: 123456

Then

# will start the cluster along with Kafka and MySQL for you

risedev d my-cool-profile

For all config options of supported systems, check the comments in the template section of risedev.yml.

Escape hatch: user-managed mode

user-managed is a special config. When set to true, you will need to start the system by yourself. You may wonder why bother to add it to the RiseDev profile if you start it by yourself. In this case, the config will still be loaded by RiseDev, which will be useful in tests. See chapters below for more details.

The user-managed mode can be used as a workaround to start a system that is not yet supported by RiseDev, or is buggy. It’s also used to configure the CI profile. (In CI, all services are pre-started by ci/docker-compose.yml.)

Example of the config:

- use: kafka
  user-managed: true
  address: message_queue
  port: 29092

End-to-end tests

The e2e tests are written in slt files. There are 2 main points to note:

- Use system ok to run bash commands to interact with external systems. Use this to prepare the test data, and verify the results. The whole lifecycle of a test case should be written in the same slt file.

- Use control substitution on and then use environment variables to specify the config of the external systems, e.g., the port of Kafka.

Refer to the sqllogictest-rs documentation for the details of system and substitution.

Take Kafka as an example of how the tests are written:

When you use risedev d to start the external services, related environment variables for Kafka will be available when you run risedev slt:

RISEDEV_KAFKA_BOOTSTRAP_SERVERS="127.0.0.1:9092"

RISEDEV_KAFKA_WITH_OPTIONS_COMMON="connector='kafka',properties.bootstrap.server='127.0.0.1:9092'"

RPK_BROKERS="127.0.0.1:9092"

The slt test case looks like this:

control substitution on

# Note: you can also use envvars in `system` commands, but usually it's not necessary since the CLI tools can load envvars themselves.
system ok
rpk topic create my_source -p 4

# Prepared test topic above, and produce test data now
system ok
cat << EOF | rpk topic produce my_source -f "%p %v\n" -p 0
0 {"v1": 1, "v2": "a"}
1 {"v1": 2, "v2": "b"}
2 {"v1": 3, "v2": "c"}
EOF

# Create the source, connecting to the Kafka started by RiseDev
statement ok
create source s0 (v1 int, v2 varchar) with (
  ${RISEDEV_KAFKA_WITH_OPTIONS_COMMON},
  topic = 'my_source',
  scan.startup.mode = 'earliest'
) FORMAT PLAIN ENCODE JSON;

See src/risedevtool/src/risedev_env.rs for variables supported for each service.

Note again: You need to use risedev d to start the cluster, and then use risedev slt to run the tests. It doesn’t work if you start the cluster by yourself without telling RiseDev, or if you use the raw sqllogictest binary directly.

How it works: risedev d will write env vars to .risingwave/config/risedev-env, and risedev slt will load env vars from this file.
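The env-file hand-off described above can be pictured with a small sketch. It assumes the file holds one KEY="VALUE" pair per line, like the variables listed earlier; `parse_env_file` is a hypothetical helper written for illustration and is not RiseDev's actual loader.

```rust
use std::collections::HashMap;

/// Parses lines of the form `KEY="VALUE"` (quotes optional) into a map.
/// Hypothetical helper for illustration; the real file format may differ.
pub fn parse_env_file(content: &str) -> HashMap<String, String> {
    let mut vars = HashMap::new();
    for line in content.lines() {
        let line = line.trim();
        // Skip blank lines and comments.
        if line.is_empty() || line.starts_with('#') {
            continue;
        }
        // Split on the first `=` only, since values may contain `=`.
        if let Some((key, value)) = line.split_once('=') {
            let value = value.trim();
            // Strip one pair of surrounding double quotes, if present.
            let value = value
                .strip_prefix('"')
                .and_then(|v| v.strip_suffix('"'))
                .unwrap_or(value);
            vars.insert(key.trim().to_string(), value.to_string());
        }
    }
    vars
}
```

A test runner could then export each entry of the returned map into the environment of the commands it spawns, which is why tests started outside of RiseDev do not see these variables.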

Tips for writing system commands

Refer to the sqllogictest-rs documentation for the syntax.

For simple cases, you can directly write a bash command, e.g.,

system ok
mysql -e "
    DROP DATABASE IF EXISTS testdb1; CREATE DATABASE testdb1;
    USE testdb1;
    CREATE TABLE tt1 (v1 int primary key, v2 timestamp);
    INSERT INTO tt1 VALUES (1, '2023-10-23 10:00:00');
"

system ok
cat << EOF | rpk topic produce my_source -f "%p %v\n" -p 0
0 {"v1": 1, "v2": "a"}
1 {"v1": 2, "v2": "b"}
2 {"v1": 3, "v2": "c"}
EOF

For more complex cases, you can write a test script, and invoke it in slt. Scripts can be written in any language you like, but kindly write a README.md to help other developers get started more easily.

- For ad-hoc scripts (only used for one test), it’s better to put them next to the test file.

  e.g., e2e_test/source_inline/kafka/consumer_group.mjs, which is invoked by consumer_group.slt next to it.

- For general scripts that can be used in many situations, put them in e2e_test/commands/. This directory will be loaded in PATH by risedev slt, and thus its scripts function as a kind of “built-in” commands.

  A common scenario is when a CLI tool does not accept envvars as arguments. In such cases, instead of manually specifying the arguments each time you invoke it in slt, you can create a wrapper to handle this implicitly, making it more concise. e2e_test/commands/mysql is a good demonstration.

Tips for debugging:

- Use echo to check whether the environment is correctly set.

system ok
echo $PGPORT
----
placeholder

  Then running risedev slt will return the error “result mismatch”, and show the output of the echo command, i.e., the value of PGPORT.

- Use risedev show-risedev-env to see the environment variables available for risedev slt, after you start the cluster with risedev d.

Adding a new connector to the development framework

Refer to #16449 (user-managed only MySQL) and #16514 (Docker based MySQL) as examples.

- Add a new service in the template section of risedev.yml, and add the corresponding config in src/risedevtool/src/service_config.rs.

- Implement the new service task, and add it to src/risedevtool/src/bin/risedev-dev.rs.

- Add environment variables you want to use in the slt tests in src/risedevtool/src/risedev_env.rs.

- Write tests according to the style explained in the previous section.

Source

This page describes RisingWave’s Data Source API and the architecture behind it. This may help if you are interested in how data sources work, or if you want to implement a new Data Source.

For the workflow of developing connectors, see Develop Connectors.

Components

RisingWave’s data source covers four parts: connectors, enumerators, ConnectorSource and SourceExecutor.

Connectors

A connector serves as an interface to the upstream data pipeline, such as a message queue or a file system. In the current design, it has limited concurrency: one connector instance reads from only one split of the upstream. For example, if the upstream is Kafka with three partitions, there should be three connectors in RisingWave.

All connectors need to implement the following trait, which exposes two methods to the upper layer.

// src/connector/src/base.rs
pub trait SplitReader: Sized {
    type Properties;

    async fn new(
        properties: Self::Properties,
        state: ConnectorState,
        columns: Option<Vec<Column>>,
    ) -> Result<Self>;

    async fn next(&mut self) -> Result<Option<Vec<SourceMessage>>>;
}

- new: creates a new connector with some properties. This method should support restoring from a specific state (with partitions and offsets).

- next: returns a batch of new messages and their offsets in the split.
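To make the contract of the two methods concrete, here is a minimal sketch of a reader over an in-memory split. It uses a simplified, synchronous stand-in for the trait (no async, no `Result`, and a plain offset instead of `ConnectorState`); `SimpleSplitReader`, `SimpleMessage`, and `VecSplitReader` are hypothetical names that do not exist in the RisingWave code base.

```rust
/// A message together with its offset inside the split (simplified stand-in).
#[derive(Debug, Clone, PartialEq)]
pub struct SimpleMessage {
    pub offset: u64,
    pub payload: String,
}

/// Synchronous, simplified analogue of `SplitReader`, for illustration only.
pub trait SimpleSplitReader: Sized {
    type Properties;
    /// `start_offset` plays the role of the restored connector state.
    fn new(properties: Self::Properties, start_offset: u64) -> Self;
    /// Returns the next batch, or `None` when the split is exhausted.
    fn next(&mut self) -> Option<Vec<SimpleMessage>>;
}

/// Reads one in-memory "split" in fixed-size batches.
pub struct VecSplitReader {
    data: Vec<String>,
    next_offset: u64,
    batch_size: usize,
}

impl SimpleSplitReader for VecSplitReader {
    /// (records of the split, batch size)
    type Properties = (Vec<String>, usize);

    fn new(properties: Self::Properties, start_offset: u64) -> Self {
        let (data, batch_size) = properties;
        Self { data, next_offset: start_offset, batch_size: batch_size.max(1) }
    }

    fn next(&mut self) -> Option<Vec<SimpleMessage>> {
        let start = self.next_offset as usize;
        if start >= self.data.len() {
            return None;
        }
        let end = (start + self.batch_size).min(self.data.len());
        let batch = (start..end)
            .map(|i| SimpleMessage { offset: i as u64, payload: self.data[i].clone() })
            .collect();
        // Remember where to continue on the next call.
        self.next_offset = end as u64;
        Some(batch)
    }
}
```

Constructing the reader with a non-zero `start_offset` mirrors restoring from a saved state: reading resumes at that offset instead of the beginning of the split.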

Enumerators

The enumerator periodically queries the upstream to discover changes in splits; in most cases the number of splits only increases. The enumerator is a separate task that runs on the meta service. If the upstream splits change, the enumerator notifies the connector through a config change so that it updates its subscription.

All enumerators need to implement the following trait.

// src/connector/src/base.rs
pub trait SplitEnumerator: Sized {
    type Split: SplitMetaData + Send + Sync;
    type Properties;

    async fn new(properties: Self::Properties) -> Result<Self>;

    async fn list_splits(&mut self) -> Result<Vec<Self::Split>>;
}

- new: creates an enumerator with some properties.

- list_splits: requests the upstream and returns all partitions.
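Discovering split changes amounts to comparing successive `list_splits` results. The following sketch shows one way this could be done, assuming splits are identified by plain string IDs; `SplitTracker` is a hypothetical helper and not part of RisingWave.

```rust
use std::collections::BTreeSet;

/// Tracks the splits seen so far and reports newly discovered ones.
/// Hypothetical helper: split IDs are plain strings here.
#[derive(Default)]
pub struct SplitTracker {
    known: BTreeSet<String>,
}

impl SplitTracker {
    /// Feeds the result of one `list_splits` call and returns the splits
    /// that were not known before, in sorted order.
    pub fn observe(&mut self, listed: &[&str]) -> Vec<String> {
        let mut added = Vec::new();
        for id in listed {
            // `insert` returns true only if the ID was not present yet.
            if self.known.insert(id.to_string()) {
                added.push(id.to_string());
            }
        }
        added.sort();
        added
    }
}
```

Only a non-empty result would need to be turned into a notification to the readers, so an unchanged upstream causes no work downstream.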

ConnectorSource

ConnectorSource unites all connectors via the SourceReader trait. It also holds a parser, which parses raw data into stream chunks according to the column descriptions. A ConnectorSource can handle multiple splits by spawning a new thread for each split. If the source is assigned no split, it starts a dummy reader as a placeholder, whose next method never returns.

SourceExecutor

SourceExecutor is initialized with splits assigned by the enumerator to subscribe. The channel of the data chunks and the channel of the barrier are combined at this level and the SourceExecutor needs to prioritize and correctly handle the barriers.

How It Works

- When a source is defined, the meta service registers its schema and broadcasts it to compute nodes. Each compute node extracts the properties from the frontend, builds the corresponding components, and stores them as SourceDesc in source_manager, identified by table_id. Note that at this stage, the source instance is only built but not running.

- No SourceExecutor will be built until a subsequent materialized view is created. SourceExecutor fetches the specific source instance from source_manager identified by table_id, holds a copy of it, and initializes the corresponding state store at this stage.

- When receiving a barrier, SourceExecutor checks whether it contains an assign_split mutation. If the partition assignment in the assign_split mutation differs from the current one, the SourceExecutor rebuilds the ConnectorSource and other underlying services based on the information in the mutation, then starts reading from the new split and offset.

- Whenever a barrier is received, the state handler takes a snapshot of the ConnectorSource and labels the snapshot with an epoch number. When an error occurs, SourceExecutor takes a specific state and applies it.

Continuous Integration

CI Labels Guide

- [ci/run-xxx ...]: Run additional steps in the PR workflow indicated by ci/run-xxx in your PR.

- ci/pr/run-selected + [ci/run-xxx ...]: Only run selected steps indicated by ci/run-xxx in your DRAFT PR.

- ci/main-cron/run-all: Run the full main-cron workflow for your PR.

- ci/main-cron/run-selected + [ci/run-xxx …]: Run specific steps indicated by ci/run-xxx from the main-cron workflow, in your PR. This can be used to verify that a main-cron fix works as expected.

- To reference [ci/run-xxx ...] labels, you may look at steps from pull-request.yml and main-cron.yml.

Example

https://github.com/risingwavelabs/risingwave/pull/17197

To run e2e-test and e2e-source-test for main-cron in your pull request:

- Add ci/run-e2e-test.

- Add ci/run-e2e-source-tests.

- Add ci/main-cron/run-selected to skip all other steps which were not selected with ci/run-xxx.

Main Cron Bisect Guide

- Create a new build via buildkite

- Add the following environment variables:

  - GOOD_COMMIT: The good commit hash.

  - BAD_COMMIT: The bad commit hash.

  - BISECT_BRANCH: The branch name where the bisect will be performed.

  - CI_STEPS: The CI_STEPS to run during the bisect. Separate multiple steps with a comma. You can check the labels for this in main-cron.yml, under the conditions for each step.

Example you can try on buildkite:

- Environment variables:

GOOD_COMMIT=29791ddf16fdf2c2e83ad3a58215f434e610f89a
BAD_COMMIT=7f36bf17c1d19a1e6b2cdb90491d3c08ae8b0004
BISECT_BRANCH=kwannoel/test-bisect
CI_STEPS="test-bisect,disable-build"

Architecture Design

Motivation

This document serves as one of the materials for newcomers to learn the high-level architecture and the functionalities of each component.

Architecture

There are currently 4 types of nodes in the cluster:

- Frontend: Frontend is a stateless proxy that accepts user queries through Postgres protocol. It is responsible for parsing, validation, optimization, and answering the results of each individual query.

- ComputeNode: ComputeNode is responsible for executing the optimized query plan.

- Compactor: Compactor is a stateless worker node responsible for executing the compaction tasks for our storage engine.

- MetaServer: The central metadata management service. It also acts as a failure detector that periodically sends heartbeats to frontends and compute-nodes in the cluster. There are multiple sub-components running in MetaServer:

- ClusterManager: Manages the cluster information, such as the address and status of nodes.

- StreamManager: Manages the stream graph of RisingWave.

- CatalogManager: Manages the table catalog in RisingWave. DDL goes through the catalog manager, and catalog updates are propagated to all frontend nodes asynchronously.

- BarrierManager: Manages barrier injection and collection. Checkpoints are initiated by the barrier manager regularly.

- HummockManager: Manages the SST file manifest and meta-info of Hummock storage.

- CompactionManager: Manages the compaction status and task assignment of Hummock storage.

The topmost component is the Postgres client. It issues queries through TCP-based Postgres wire protocol.

The leftmost component is the streaming data source. Kafka is the most representative system for streaming sources. Alternatives such as Redpanda, Apache Pulsar, AWS Kinesis, and Google Pub/Sub are also widely used. Streams from Kafka will be consumed and processed through the pipeline in the database.

The bottom-most component is AWS S3, or MinIO (an open-source S3-compatible system). We employ a disaggregated architecture in order to elastically scale the compute-nodes without migrating the storage.

Execution Mode

There are 2 execution modes in our system serving different analytics purposes.

Batch-Query Mode

The first is the batch-query mode. Users issue such a query via a SELECT statement and the system answers immediately. This is the most typical RDBMS use case.

Let’s begin with a simple SELECT and see how it is executed.

SELECT SUM(t.quantity) FROM t group by t.company;

The query will be sliced into multiple plan fragments, each being an independent scheduling unit and probably with different parallelism. For simplicity, parallelism is usually set to the number of CPU cores in the cluster. For example, if there are 3 compute-nodes in the cluster, each with 4 CPU cores, then the parallelism will be set to 12 by default.

Each parallel unit is called a task. Specifically, PlanFragment 2 will be distributed as 4 tasks to 4 CPU cores.

Behind the TableScan operator, there’s a storage engine called Hummock that stores the internal states, materialized views, and the tables. Note that only the materialized views and tables are queryable. The internal states are invisible to users.

To know more about Hummock, you can check out “An Overview of RisingWave State Store”.

Streaming Mode

The other execution mode is the streaming mode. Users build streaming pipelines via CREATE MATERIALIZED VIEW statement. For example:

CREATE MATERIALIZED VIEW mv1 AS SELECT SUM(t.quantity) as q FROM t group by t.company;

When the data source (e.g., Kafka) propagates a batch of records into the system, the materialized view will refresh automatically.

Assume that we have a sequence [(2, "AMERICA"), (3, "ASIA"), (4, "AMERICA"), (5, "ASIA")]. After the sequence flows through the DAG, the MV will be updated to:

| A | B |

|---|---|

| 6 | AMERICA |

| 8 | ASIA |

When another sequence [(6, "EUROPE"), (7, "EUROPE")] comes, the MV will soon become:

| A | B |

|---|---|

| 6 | AMERICA |

| 8 | ASIA |

| 13 | EUROPE |
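The two refreshes above can be reproduced with a small sketch of incremental maintenance for this particular view. It is an illustration of the idea only, not RisingWave's executor code; `SumByCompany` is a hypothetical type, and the input records are assumed to be `(quantity, company)` pairs.

```rust
use std::collections::BTreeMap;

/// Incrementally maintained `SUM(quantity) GROUP BY company`.
#[derive(Default)]
pub struct SumByCompany {
    sums: BTreeMap<String, i64>,
}

impl SumByCompany {
    /// Applies a batch of newly arrived `(quantity, company)` records.
    /// Only the affected groups are touched; nothing is recomputed from scratch.
    pub fn apply(&mut self, records: &[(i64, &str)]) {
        for (quantity, company) in records {
            *self.sums.entry(company.to_string()).or_insert(0) += quantity;
        }
    }

    /// Returns the current view contents as `(sum, company)` rows,
    /// ordered by company name.
    pub fn rows(&self) -> Vec<(i64, String)> {
        self.sums.iter().map(|(c, s)| (*s, c.clone())).collect()
    }
}
```

Applying the first sequence yields the rows (6, AMERICA) and (8, ASIA); applying the second sequence adds (13, EUROPE) while leaving the existing rows untouched.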

mv1 can also act as a source for other MVs. For example, mv2 and mv3 can reuse the processing results of mv1, thus avoiding duplicate computation.

The durability of materialized views in RisingWave is built upon a snapshot-based mechanism. Every time a snapshot is triggered, the internal states of each operator will be flushed to S3. Upon failover, the operator recovers from the latest S3 checkpoint.

Since streaming states can be extremely large, so large that they cannot be held in memory in their entirety (or only inefficiently), we have designed Hummock to be highly scalable. Compared to Flink’s RocksDB-based state store, Hummock is cloud-native and highly elastic.

For more details of our streaming engine, please refer to “An Overview of RisingWave Streaming Engine”.

An Overview of the RisingWave Streaming Engine

Overview

RisingWave provides real-time analytics to serve users’ needs. This is done by defining materialized views (MV). All materialized views are automatically refreshed according to recent updates, so that querying materialized views reflects real-time analytical results. This refreshing is carried out by the RisingWave streaming engine.

The core design principles of the RisingWave streaming engine are summarized as follows.

- Actor model based execution engine. We create a set of actors such that each actor reacts to its own input message, including both data update and control signal. In this way we build a highly concurrent and efficient streaming engine.

- Shared storage for states. The backbone of the state storage is based on shared cloud object storage (currently AWS S3), which gives us computational elasticity, cheap and infinite storage capacity, and simplicity during configuration change.

- Everything is a table, everything is a state. We treat every object in our internal storage as both a logical table and an internal state. Therefore, they can be effectively managed by catalog, and be updated in a unified streaming engine with consistency guarantee.

In this document we give an overview of the RisingWave streaming engine.

Architecture

The overall architecture of RisingWave is depicted in the figure above. In brief, the RisingWave streaming engine consists of three sets of nodes: frontend, compute nodes, and meta service. The frontend node consists of the serving layer, handling users’ SQL requests concurrently. Underlying is the processing layer. Each compute node hosts a collection of long-running actors for stream processing. All actors access a shared persistence layer of storage (currently AWS S3) as their state storage. The meta service maintains all meta-information and coordinates the whole cluster.

When receiving a create materialized view statement at the frontend, a materialized view and the corresponding streaming pipeline are built in the following steps.

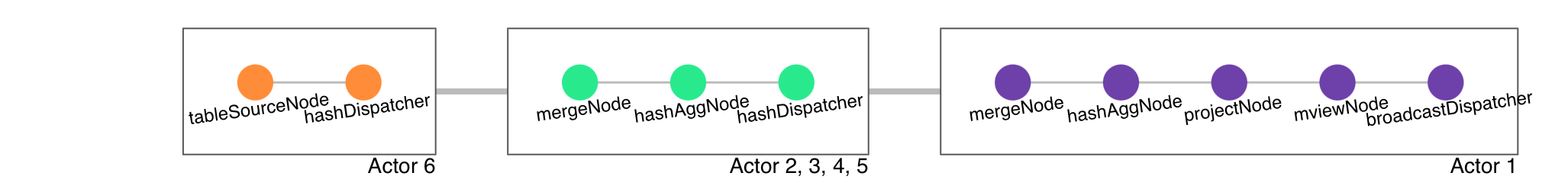

- Building a stream plan. Here a stream plan is a logical plan which consists of logical operators encoding the dataflow. This is carried out by the streaming planner at the frontend.

- Fragmentation. The stream fragmenter at the meta service breaks the generated logical stream plan into stream fragments, and duplicates such fragments when necessary. Here a stream fragment holds partial nodes from the stream plan, and each fragment can be parallelized by building multiple actors for data parallelization.

- Scheduling plan fragments. The meta service distributes different fragments to different compute nodes and lets all compute nodes build their local actors.

- Initializing the job at the backend. The meta service notifies all compute nodes to start serving streaming pipelines.

Actors, executors, and states

Actors

Actors are the minimal unit to be scheduled in the RisingWave streaming engine, such that there is no parallelism inside each actor. The typical structure of an actor is depicted on the right of the figure above. An actor consists of three parts.

- Merger (optional). Each merger merges the messages from different upstream actors into one channel, such that the executors can handle messages sequentially. The merger is also in charge of aligning barriers to support checkpoints (details described later).

- A chain of executors. Each executor is the basic unit of delta computation (details described later).

- Dispatcher (optional). Each dispatcher will send its received messages to different downstream actors according to certain strategies, e.g. hash shuffling or round-robin.
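The two dispatch strategies mentioned above can be sketched as follows. This is a simplified illustration that uses the standard library hasher and plain indices for downstream actors; `hash_dispatch` and `RoundRobin` are hypothetical names, and the real dispatcher may map keys to actors differently.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Picks a downstream actor index for a key by hashing it, so that all
/// messages with the same key reach the same downstream actor.
pub fn hash_dispatch<K: Hash>(key: &K, downstream_count: usize) -> usize {
    assert!(downstream_count > 0, "need at least one downstream actor");
    let mut hasher = DefaultHasher::new();
    key.hash(&mut hasher);
    (hasher.finish() % downstream_count as u64) as usize
}

/// Cycles through downstream actors regardless of message content.
pub struct RoundRobin {
    next: usize,
    downstream_count: usize,
}

impl RoundRobin {
    pub fn new(downstream_count: usize) -> Self {
        assert!(downstream_count > 0, "need at least one downstream actor");
        Self { next: 0, downstream_count }
    }

    /// Returns the target for the next message and advances the cursor.
    pub fn dispatch(&mut self) -> usize {
        let target = self.next;
        self.next = (self.next + 1) % self.downstream_count;
        target
    }
}
```

Hash shuffling is the natural choice when the downstream executor keeps per-key state (for example a grouped aggregation), while round-robin only balances load.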

The execution of actors is carried out by tokio async runtime. After an actor starts running, it runs an infinite loop in which it continuously runs async functions to generate outputs, until it receives a stop message.

Messages between two local actors are transferred via channels. For two actors located on different compute nodes, messages are re-directed to an exchange service. The exchange service will continuously exchange messages with each other via RPC requests.

Executors

Executors are the basic computational units in the streaming engine. Each executor responds to its received messages and computes an output message atomically, i.e., the computation inside each executor will not be broken down.

The underlying algorithmic framework of the RisingWave streaming system is the traditional change propagation framework. Given a materialized view to be maintained, we build a set of executors where each executor corresponds to a relational operator (including base table). When any of the base tables receive an update, the streaming engine computes the changes to each of the materialized views by recursively computing the update from the leaf to the root. Each node receives an update from one of its children, computes the local update, and propagates the update to its parents. By guaranteeing the correctness of every single executor, we get a composable framework for maintaining arbitrary SQL queries.

Checkpoint, Consistency, and Fault tolerance

We use the term consistency to denote the model of the completeness and correctness of querying materialized views. We follow the consistency model introduced in Materialize. More specifically, the system assures that the query result is always a consistent snapshot as of a certain timestamp t that precedes the timestamp at which the query is issued. Also, later queries always get consistent snapshots from a later timestamp. A consistent snapshot at t requires that all messages no later than t are reflected in the snapshot exactly once, while all messages after t are not reflected.

Barrier based checkpoint

To guarantee consistency, RisingWave introduces a Chandy-Lamport style consistent snapshot algorithm as its checkpoint scheme.

This procedure guarantees that every state to be flushed into the storage is consistent (matching a certain barrier at the source). Therefore, when querying materialized views, consistency is naturally guaranteed when the batch engine reads a consistent snapshot (of views and tables) on the storage. We also call each barrier an epoch and sometimes use both terms interchangeably as data streams are cut into epochs. In other words, the write to the database is visible only after it has been committed to the storage via the checkpoint.

To improve the efficiency, all dirty states on the same compute node are gathered to a shared buffer, and the compute node asynchronously flushes the whole shared buffer into a single SST file in the storage, such that the checkpoint procedure shall not block stream processing.

See more detailed descriptions on Checkpoint.

Fault tolerance

When the streaming engine crashes, the system must globally roll back to a previous consistent snapshot. To achieve this, whenever the meta service detects the failure of a compute node or of an ongoing checkpoint procedure, it triggers a recovery process. After rebuilding the streaming pipeline, each executor resets its local state from a consistent snapshot on the storage and resumes its computation.

Checkpoint

Revisit: Consistency Model

Similar to other relational databases, RisingWave provides consistent snapshot reads on both tables and materialized views. Specifically,

- Consistency. Given a query Q and the latest event timestamp t, the system returns a result Q(t′) such that Q(t′) is consistent on a timestamp t′≤t , i.e. evaluating Q over a snapshot S(t′) from a previous timestamp t′≤t, where S(t′) is the set of all tuples presented before timestamp t′. That is, the system delivers a query result that is consistent with a previous timestamp t′.

- Monotonicity. For any two timestamps t1 and t2 such that t1<t2, assume the result for Q on t1 and t2 are consistent with snapshots on t1′ and t2′ respectively, then t1′<t2′. That is, later queries should not return results that correspond to earlier snapshots.

Note that RisingWave does not guarantee that a write is visible to subsequent reads, a.k.a. read-after-write consistency. Users may use the FLUSH command to make sure the changes have taken effect before reads.

Internally, the upcoming changes may take a while to propagate from sources to materialized views, and at least one barrier event is required to flush the changes. These two kinds of latency determine the latency between write and read.

Streaming Checkpoint

The consistent checkpoints play 2 roles in our system.

- Fault-tolerance. To recover the cluster from an unexpected failure, every stateful streaming operator needs to recover its state from a consistent checkpoint.

- Consistent snapshot. The snapshot to be read is actually the latest completed checkpoint. As the previous section discussed, the data of tables and materialized views must be consistent within one snapshot.

To guarantee consistency, RisingWave performs checkpointing via the Chandy–Lamport algorithm. A special kind of message, the checkpoint barrier, is generated at the streaming sources and propagates across the streaming graph to the materialized views (or sinks).

In particular, RisingWave periodically (every barrier_interval_ms) repeats the following procedure:

- The meta service initializes a barrier and broadcasts it to all source actors across the streaming engine.

- The barrier messages go through every streaming operator (actors) in the streaming graph.

  - For fan-out operators like Dispatch, the barrier messages are copied to every downstream.

  - For fan-in operators like Merge or Join, the barrier messages are collected and emitted out once collected from all upstreams.

  - For other operators, the barrier messages flow through the operators and trigger a checkpoint operation on them. Namely, flush the changes into storage.

- When all dirty states from a compute node are flushed to storage, the compute node sends a finish signal to the meta service.

- After receiving the finish signal from all compute nodes, the meta service tells the storage manager to commit the checkpoint and finishes the checkpoint procedure.
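The fan-in rule in the procedure above (emit the barrier only once it has been collected from all upstreams) can be sketched as below. The sketch assumes a fixed number of upstreams identified by index and only one barrier in flight at a time; `BarrierAligner` is a hypothetical type, not the actual Merge implementation.

```rust
use std::collections::HashSet;

/// Tracks barrier arrival on a fan-in operator with a fixed set of upstreams.
pub struct BarrierAligner {
    upstream_count: usize,
    /// Upstreams that already delivered the barrier of the current epoch.
    arrived: HashSet<usize>,
}

impl BarrierAligner {
    pub fn new(upstream_count: usize) -> Self {
        Self { upstream_count, arrived: HashSet::new() }
    }

    /// Records a barrier from `upstream`. Returns `true` exactly when the
    /// barrier has been collected from all upstreams and can be emitted.
    pub fn on_barrier(&mut self, upstream: usize) -> bool {
        self.arrived.insert(upstream);
        if self.arrived.len() == self.upstream_count {
            // Reset so that the next epoch starts from an empty set.
            self.arrived.clear();
            true
        } else {
            false
        }
    }
}
```

In a real operator, data arriving from an upstream that has already delivered its barrier would have to wait until the barrier is emitted, so that changes belonging to different epochs are not mixed.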

Checkpoint on Storage

As mentioned before, during checkpointing, every operator writes its changes of this epoch into storage. For the storage layer, this data is still uncommitted, i.e. not persisted to the shared storage. However, the data still needs to be visible to that operator locally.

A local shared buffer is introduced to stage these uncommitted write batches. Once the checkpoint barriers have passed through all actors, the storage manager can notify all compute nodes to ‘commit’ their buffered write batches into the shared storage.

Another benefit of the shared buffer is that the write batches in a compute node can be compacted into a single SSTable file before uploading, which significantly reduces the number of SSTable files in Layer 0.
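As a rough illustration of the idea, here is a minimal sketch of a shared buffer that stages write batches per epoch, serves local reads, and merges all batches of an epoch into one sorted run on commit. The type `SharedBuffer`, its methods, and the use of string keys and values are assumptions made for this example. The sorted `Vec` only stands in for an SSTable.

```rust
use std::collections::BTreeMap;

/// Hypothetical write batch: key-value pairs written by one operator.
type WriteBatch = Vec<(String, String)>;

/// Hypothetical shared buffer staging uncommitted batches per epoch.
#[derive(Default)]
struct SharedBuffer {
    staged: BTreeMap<u64, Vec<WriteBatch>>,
}

impl SharedBuffer {
    /// Stages an uncommitted write batch for `epoch`.
    fn write(&mut self, epoch: u64, batch: WriteBatch) {
        self.staged.entry(epoch).or_default().push(batch);
    }

    /// Local read of uncommitted data: the newest epoch and batch win.
    fn get(&self, key: &str) -> Option<&str> {
        self.staged
            .values()
            .rev()
            .flat_map(|batches| batches.iter().rev())
            .flat_map(|batch| batch.iter().rev())
            .find(|(k, _)| k.as_str() == key)
            .map(|(_, v)| v.as_str())
    }

    /// On commit, merges all batches of `epoch` into a single sorted run
    /// (standing in for one SSTable) and removes them from the buffer.
    fn commit(&mut self, epoch: u64) -> Vec<(String, String)> {
        let batches = self.staged.remove(&epoch).unwrap_or_default();
        let mut merged = BTreeMap::new();
        for (k, v) in batches.into_iter().flatten() {
            merged.insert(k, v); // later writes overwrite earlier ones
        }
        merged.into_iter().collect()
    }
}
```

Merging before upload is what keeps the number of files small in this sketch: many staged batches become one sorted run per committed epoch.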

Aggregations

We will cover the internal implementation of common aggregations in this document.

Frontend

TODO

Expression Framework

TODO

HashAggExecutor

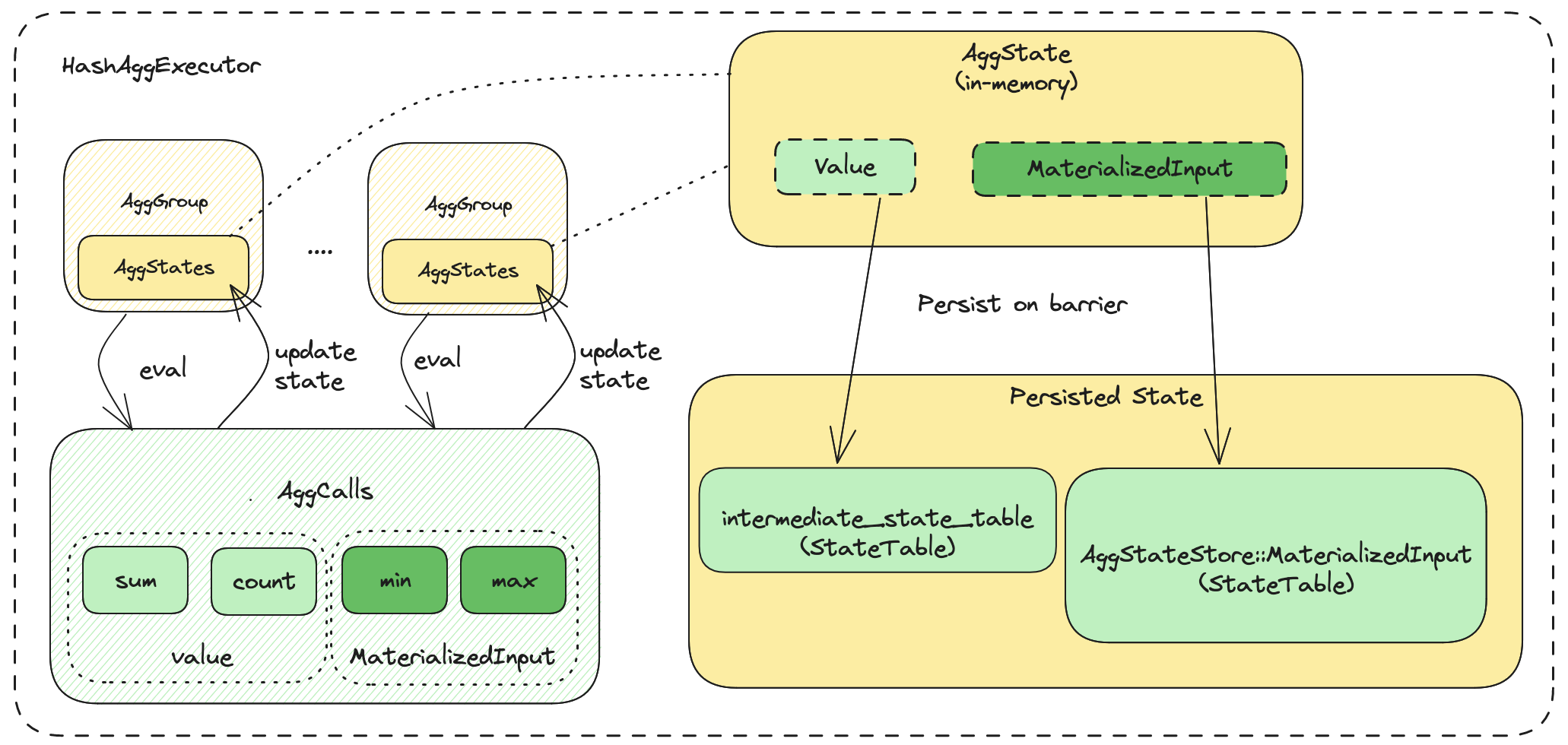

Within the HashAggExecutor, there are 4 main components:

- AggCalls.

- AggState.

- AggGroups.

- Persisted State.

AggCalls are the aggregation calls for the query. For instance, a query with SUM(v1), COUNT(v2) has the AggCalls SUM and COUNT.

AggState is the state we use to compute the result (output) of the aggregation call. Each aggregation group has an AggState for each AggCall.

AggGroups are created per aggregation group.

For instance with GROUP BY x1, x2, there will be a group for each unique combination of x1 and x2.

Whenever stream chunks come in, the executor will update the aggregation state for each group, per agg call.

On barrier, we will persist the in-memory states.

For value type aggregations, we will persist the state to the intermediate state table.

This state table will store all value aggregations per group on a single row.

MaterializedInput type aggregations require tracking input state. For example, non-append-only min/max.

Each of these aggregations has its own state table (AggStateStorage::MaterializedInput), which stores the input state for each group.
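To make the relationship between AggCalls, AggState and AggGroups more concrete, here is a simplified sketch for value type aggregations only. The struct layout, the `apply` and `flush` methods, and the use of plain `i64` columns are assumptions for illustration and do not mirror the real HashAggExecutor. MaterializedInput aggregations and NULL handling are left out.

```rust
use std::collections::HashMap;

/// Aggregation calls of the query, e.g. SUM(v1) and a row count.
#[derive(Clone, Copy)]
enum AggCall {
    Sum { col: usize },
    Count,
}

/// Value-type state of one AggCall within one group (hypothetical layout).
#[derive(Debug, Clone, Copy, PartialEq, Default)]
struct AggState(i64);

struct HashAgg {
    group_cols: Vec<usize>,
    agg_calls: Vec<AggCall>,
    /// One entry per group key, holding one AggState per AggCall.
    groups: HashMap<Vec<i64>, Vec<AggState>>,
}

impl HashAgg {
    /// Applies one row of a stream chunk; `op` is +1 (insert) or -1 (delete).
    fn apply(&mut self, op: i64, row: &[i64]) {
        let key: Vec<i64> = self.group_cols.iter().map(|&c| row[c]).collect();
        let n = self.agg_calls.len();
        let states = self
            .groups
            .entry(key)
            .or_insert_with(|| vec![AggState::default(); n]);
        for (state, call) in states.iter_mut().zip(&self.agg_calls) {
            match *call {
                AggCall::Sum { col } => state.0 += op * row[col],
                AggCall::Count => state.0 += op,
            }
        }
    }

    /// On barrier: one row per group holding all value aggregations,
    /// standing in for a write to the intermediate state table.
    fn flush(&self) -> Vec<(Vec<i64>, Vec<i64>)> {
        let mut rows: Vec<_> = self
            .groups
            .iter()
            .map(|(k, states)| (k.clone(), states.iter().map(|s| s.0).collect::<Vec<i64>>()))
            .collect();
        rows.sort();
        rows
    }
}
```

Each `apply` call updates every AggState of exactly one group. `flush` then produces a single row per group, matching the single-row layout described above for the intermediate state table.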

Initialization of AggGroups

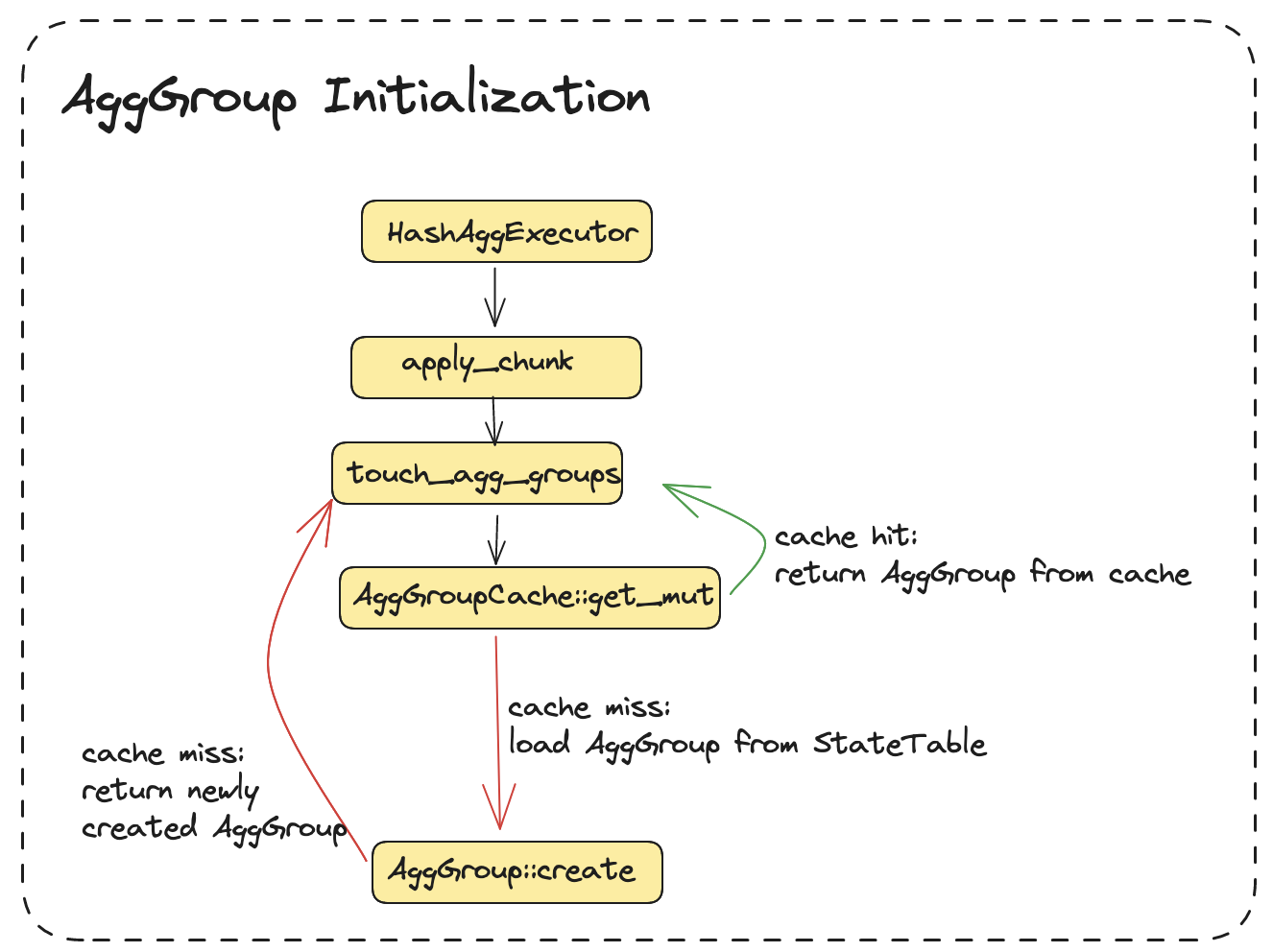

AggGroups are initialized when corresponding aggregation groups are not found in AggGroupCache.

This could be either because the group was evicted from the AggGroupCache, or because it’s a new group key.

It could take a while to initialize agg groups, hence we cache them in AggGroupCache.

MView on Top of MView

Background

RisingWave supports creating a new materialized view (abbreviated as mview) based on the source and another mview, so users can split their data into multiple layers and use chains of mviews to connect them.

In detail, we will support the creation of a materialized view whose source is some other mview(s). Please note that there should not be a circular dependency on mviews.

create table t1 (v1 int, deleted boolean);

create materialized view mv1 as select * from t1 where deleted = false;

create materialized view mv2 as select sum(v1) as sum_v1 from mv1;

create materialized view mv3 as select count(v1) as count_v1 from mv1;

Design

Broadcast operator

In physical representation, we introduce a dispatcher operator type, Broadcast. Broadcast dispatcher, as its name indicates, will dispatch every message to multiple downstreams. To simplify our design, we can assume that every MViewOperator has a Broadcast output, with zero or more downstreams.

Create new mview online

Assume that we already have a materialized view mv1, and we want to create a new materialized view mv2 based on mv1. This is equivalent to a configuration change to the Broadcast dispatcher. Before the real change happens, we have to apply the snapshot of mv1 to mv2 first. Therefore, we introduce another operator named Chain.

The Chain operator has two inputs. The first one will be a batch query, denoted by the blue patterns in the figure below, which is a finite append-only stream (the snapshot of historical data in the base mview). The second one is its original input, an infinite stream, denoted by the red patterns.

The full process of creation is:

- The frontend parses the query and sends the plan to StreamManager.

- StreamManager creates the new actors.

- StreamManager chooses a change epoch e1, pins a snapshot of mv1 at e1, and sends a barrier with e1 and change info.

- The broadcast operator receives the barrier, creates a SnapshotStream of mv1 with e1 and a Chain operator, then connects them all (these changes happen only in memory).

- The broadcast operator sends a normal barrier e1 to all downstreams, and continues.

- The Chain operator consumes all messages from snapshot and receives EOF, then consumes buffered messages from upstream.

- StreamManager discovers that mv2 has almost caught up with the progress of mv1, and the creation succeeds.
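The behaviour of the Chain operator in the steps above can be sketched as a small state machine: it drains the finite snapshot first and, after EOF, switches to the messages buffered from upstream. The `Chain` struct below, its `Phase` enum, and the use of `i64` rows are hypothetical simplifications. Barriers and epochs are omitted.

```rust
use std::collections::VecDeque;

/// Which input the Chain operator is currently consuming.
enum Phase {
    Snapshot,
    Upstream,
}

/// Hypothetical Chain operator over rows represented as `i64`.
struct Chain<S: Iterator<Item = i64>> {
    snapshot: S,
    /// Upstream messages that arrived while the snapshot was being read.
    buffered: VecDeque<i64>,
    phase: Phase,
}

impl<S: Iterator<Item = i64>> Chain<S> {
    fn new(snapshot: S) -> Self {
        Self { snapshot, buffered: VecDeque::new(), phase: Phase::Snapshot }
    }

    /// Called when a message arrives from the original (infinite) input.
    fn push_upstream(&mut self, row: i64) {
        self.buffered.push_back(row);
    }

    /// Returns the next output row: snapshot rows first, then upstream rows.
    fn next(&mut self) -> Option<i64> {
        if let Phase::Snapshot = self.phase {
            if let Some(row) = self.snapshot.next() {
                return Some(row);
            }
            // EOF of the snapshot: switch to the upstream input for good.
            self.phase = Phase::Upstream;
        }
        self.buffered.pop_front()
    }
}
```

Upstream rows pushed while the snapshot is still being read are never emitted early. They only come out after the last snapshot row.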

Drop mview online

Assume that we already have three materialized views mv1, mv2, and mv3. mv2 and mv3 are on top of mv1, so mv1 is not allowed to be dropped.

The full process of dropping mv3 is:

- The frontend parses the query and sends the plan to StreamManager.

- StreamManager chooses a change epoch e1, and sends a barrier with e1 and change info.

- The broadcast operator sends a normal barrier e1 to all downstreams.

- The broadcast operator removes the dropped output from its outputs, and continues.

- StreamManager discovers that mv3 has reached the epoch e1, then physically drops the extra fragments.

Backfill

Backfill is used by various components of our system to merge historical data with the realtime data stream.

There are many variants to it, and we will discuss them in the following sections.

Backfilling 101

Motivation

When creating a Materialized View on top of another one, we need to fetch all the historical data first. Only then can we start processing the realtime updates and applying them to the stream.

However, in the process of doing so, we need to either:

- Buffer all the updates.

- Block the upstream from sending updates (i.e. pause the entire stream graph).

For the first option, it is not feasible to buffer all the updates when the historical data takes a while to process. Buffering all the updates can cause OOM.

For the second option, it is also not feasible, as we are blocking the entire stream graph whenever we create a new materialized view, until processing historical data is done.

So we need a way to merge historical data and realtime updates, without blocking the upstream. This can be done by Backfill.

How it works

Backfilling is the process of merging historical data and the update stream into one.

Consider the following example.

We have the following table with 1M records:

CREATE TABLE t (

id INT PRIMARY KEY,

name VARCHAR

);

Then we create a materialized view from that table:

CREATE MATERIALIZED VIEW mv AS SELECT * FROM t;

In one epoch B, we read the historical data from t up to row 2, from data in the previous epoch A:

| op | id | name |

|---|---|---|

| + | 1 | a |

| + | 2 | b |

Note that the op column is the operation type, and + means insert.

We use insert since all the historical data are just inserts to the downstream materialized view.

In that same epoch B, suppose there are some updates to the table t due to DML statements being run:

| op | id | name |

|---|---|---|

| + | 4 | d |

| - | 1 | a |

| .. | .. | .. |

| - | 99 | zzz |

| + | 100 | zzzz |

The same updates will then be propagated to mv in epoch B.

Since we backfilled the historical data up to row 2, we only need to apply the updates up to row 2.

So downstream will just receive:

- The historical data up to row 2:

| op | id | name |

|---|---|---|

| + | 1 | a |

| + | 2 | b |

- The realtime delta stream up to row 2:

| op | id | name |

|---|---|---|

| - | 1 | a |

So we don’t need to buffer all the updates until the historical data is completely processed. Instead, at each epoch, we just read some historical data and apply any relevant updates to it.

To ensure we always make progress, we will keep track of the last row we backfilled to, and continue from after that row in the next epoch.
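The per-epoch procedure can be sketched as follows. This is a simplified model rather than the real backfill executor: `Change`, `Backfill`, `epoch` and `batch_size` are hypothetical names, the snapshot is assumed to be a slice sorted by `id`, and barriers are omitted.

```rust
/// One row change: `op` is '+' for insert or '-' for delete.
#[derive(Debug, Clone, PartialEq)]
struct Change {
    op: char,
    id: i64,
    name: String,
}

/// Hypothetical backfill state for a table keyed by `id`.
struct Backfill {
    /// Primary key of the last backfilled row, `None` before the first read.
    pos: Option<i64>,
    /// How many historical rows to read per epoch (assumed knob).
    batch_size: usize,
}

impl Backfill {
    /// Processes one epoch. `snapshot` holds the rows as of the previous
    /// epoch, sorted by `id`; `updates` are this epoch's upstream changes.
    fn epoch(&mut self, snapshot: &[(i64, String)], updates: &[Change]) -> Vec<Change> {
        // 1. Read the next batch of historical rows after the current position.
        let mut out: Vec<Change> = snapshot
            .iter()
            .filter(|(id, _)| self.pos.map_or(true, |p| *id > p))
            .take(self.batch_size)
            .map(|(id, name)| Change { op: '+', id: *id, name: name.clone() })
            .collect();
        // 2. Remember how far we got, so the next epoch continues after it.
        if let Some(last) = out.last() {
            self.pos = Some(last.id);
        }
        // 3. Forward only the updates that fall inside the backfilled range.
        let pos = self.pos;
        out.extend(
            updates
                .iter()
                .filter(|c| pos.map_or(false, |p| c.id <= p))
                .cloned(),
        );
        out
    }
}
```

With a batch size of 2 and the rows and updates from the example above, one call to `epoch` yields `+ 1 a`, `+ 2 b` and `- 1 a`, and leaves the position at row 2. Updates beyond the position are dropped in this sketch because a later snapshot read will already reflect them.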

In the following sections, we will delve into specific types of backfill.

References

RFC: Use Backfill To Let Mv On Mv Stream Again

NoShuffle Backfill

This section will mainly discuss the implementation of NoShuffle Backfill, as the concept is as described above.

This backfill executor is the precursor to arrangement backfill.

It is used to backfill RisingWave Materialized Views, Tables and Indexes.

NoShuffleBackfill executor receives upstream updates via a NoShuffleDispatcher.

For historical data, it uses batch scan to read snapshot data using the StorageTable interface.

Using the NoShuffleDispatcher means that the actors in the scan fragment need to be scheduled to the same parallel unit as the actors in the dispatcher.

This also means that the parallelism of NoShuffleBackfill is coupled with its upstream fragment.

As a result, we can’t scale backfill independently; it can only be scaled together with the upstream fragment.

Another issue with NoShuffleBackfill is the way backfill state is stored.

Backfill executor stores a single latest state across all vnodes it has:

| vnode | pk_offset |

|---|---|

| 0 | 5 |

| 1 | 5 |

| 2 | 5 |

This means that if we scale in or out, the state may not be accurate. This is because the state, if partitioned per vnode, could be:

| vnode | pk_offset |

|---|---|

| 0 | 1 |

| 1 | 2 |

| 2 | 5 |

If we ran some scaling operations, and got:

| vnode | pk_offset |

|---|---|

| 3 | 0 |

| 1 | 5 |

| 2 | 5 |

It would be unclear which pk_offset to resume from.

In the next iteration of backfill, we have ArrangementBackfill which solves these issues.
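To see why per-vnode progress removes the ambiguity described above, consider the following sketch. It assumes that progress is persisted per vnode, and that an executor taking over a set of vnodes after scaling looks up each vnode's own offset. The function `resume_offsets` and the type aliases are hypothetical and only illustrate the idea.

```rust
use std::collections::BTreeMap;

/// Hypothetical vnode id and primary-key offset types.
type VnodeId = u16;
type PkOffset = i64;

/// Returns, for every vnode this executor owns after scaling, the offset
/// to resume from. `None` means the vnode has not been backfilled yet.
fn resume_offsets(
    persisted: &BTreeMap<VnodeId, PkOffset>,
    owned_vnodes: &[VnodeId],
) -> BTreeMap<VnodeId, Option<PkOffset>> {
    owned_vnodes
        .iter()
        .map(|vnode| (*vnode, persisted.get(vnode).copied()))
        .collect()
}
```

Because each vnode carries its own offset, an executor that receives vnodes 1, 2 and 3 after scaling resumes vnodes 1 and 2 from their recorded positions and starts vnode 3 from the beginning.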

Arrangement Backfill

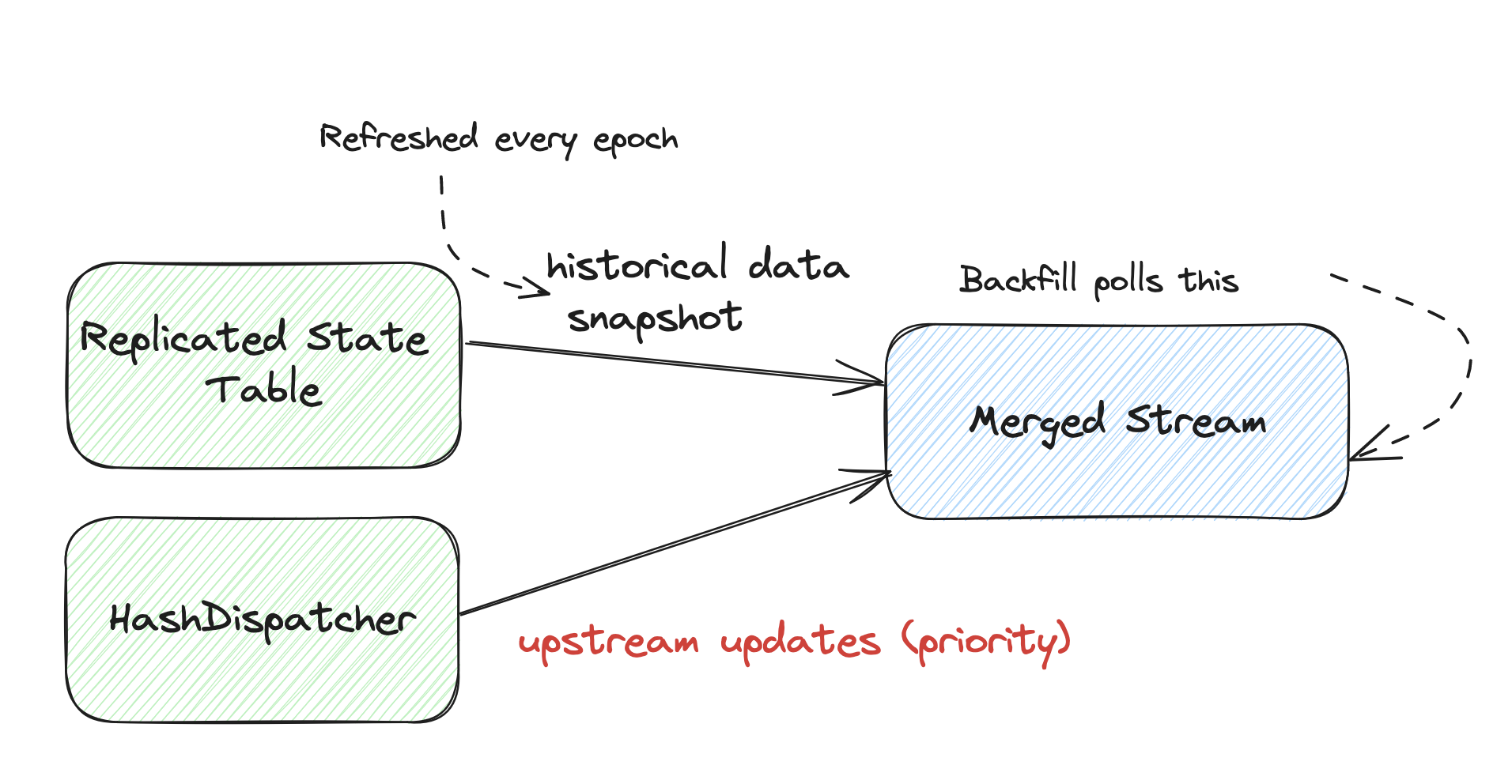

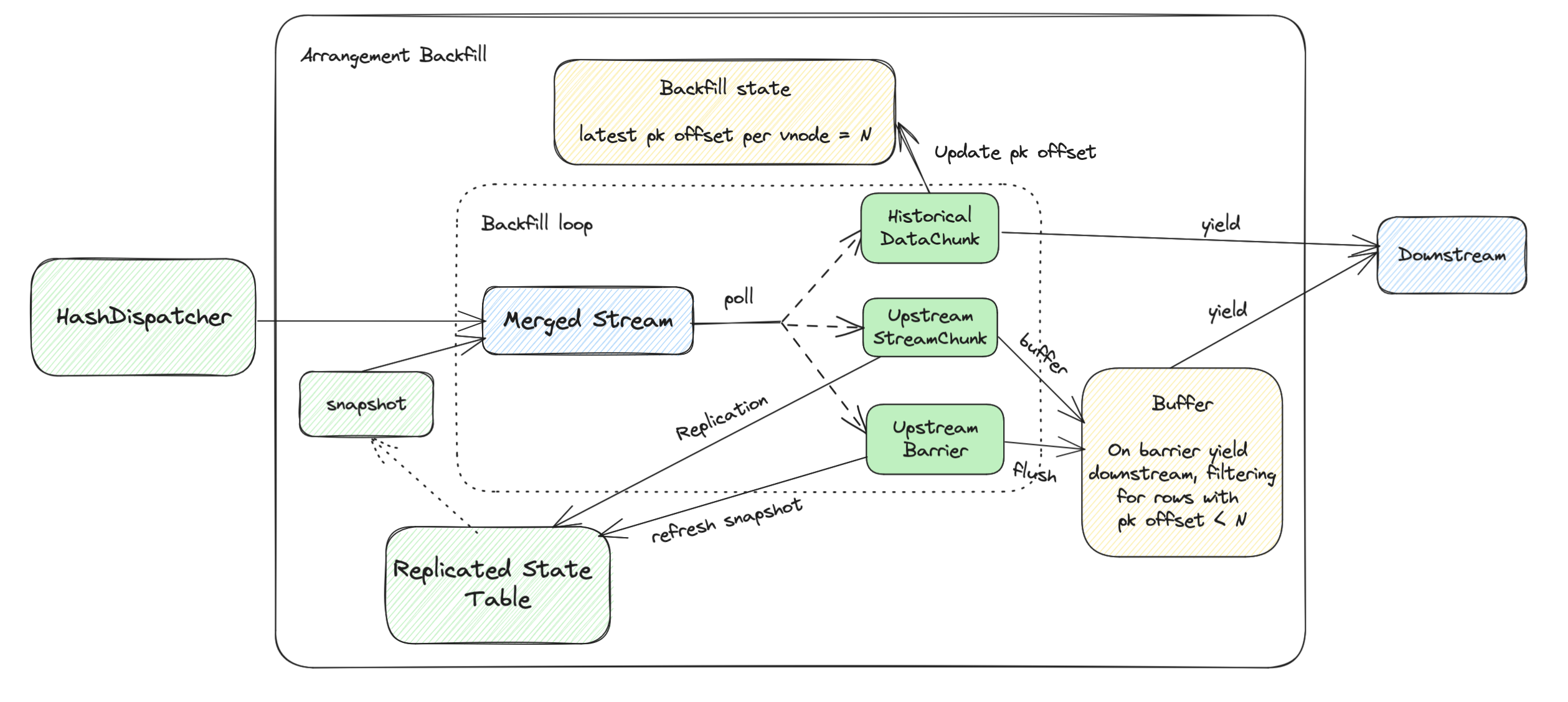

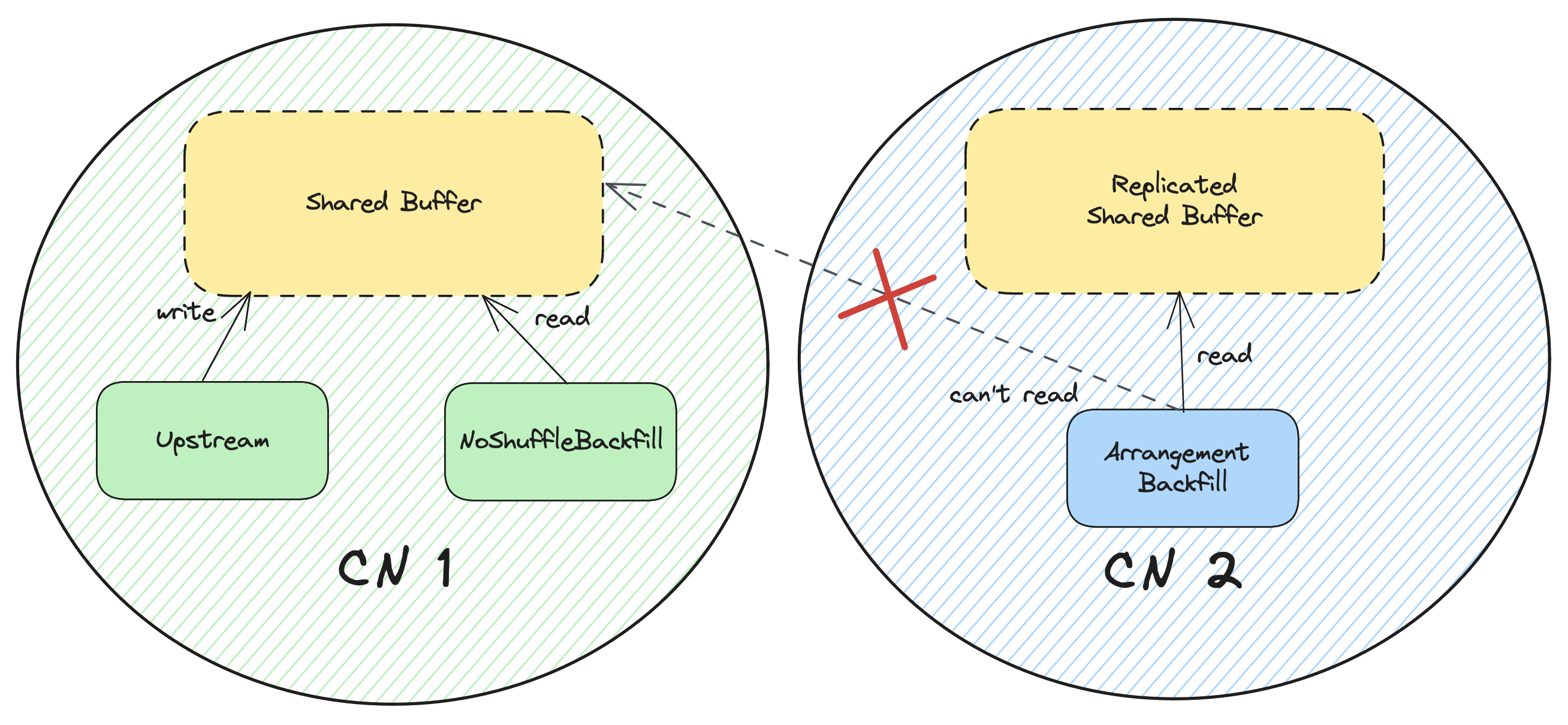

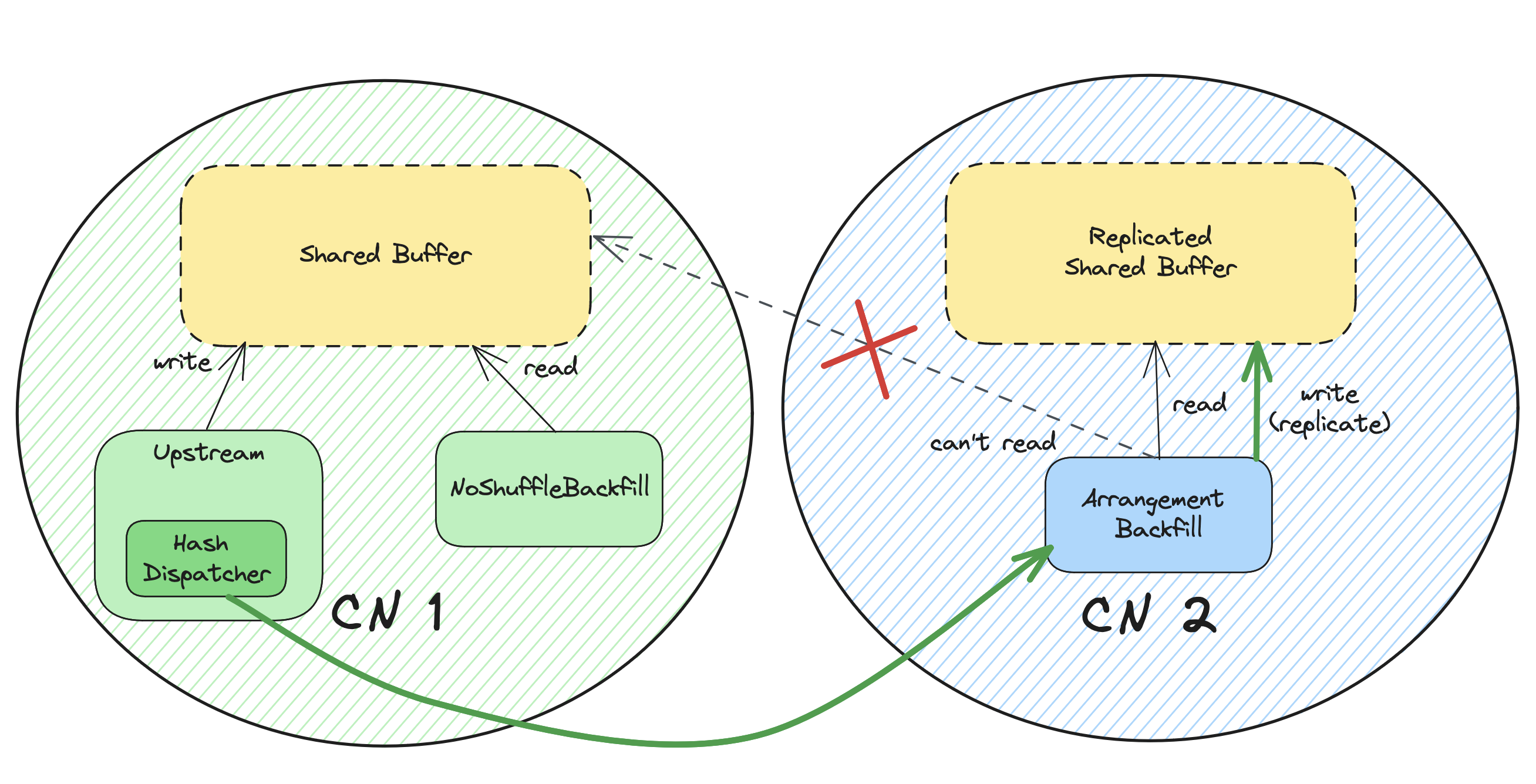

ArrangementBackfill is the next iteration of NoShuffleBackfill executor.

Similarly, it is used to backfill RisingWave Materialized Views, Tables and Indexes.

The main goal of ArrangementBackfill is to scale its parallelism independently of the upstream fragment.

This is done with replication.

Differences with NoShuffleBackfill

First, let’s discuss the key differences in components.

| Side | NoShuffleBackfill | ArrangementBackfill |

|---|---|---|

| Upstream | NoShuffleDispatcher | HashDispatcher |

| Historical | Scan on StorageTable | Scan on Replicated StateTable |

For the upstream part, it’s pretty straightforward. We use a HashDispatcher to dispatch updates to the backfill executor, since ArrangementBackfill can be on a different parallel unit than its upstream fragment.

For the historical part, we use a Replicated StateTable to read historical data, and replicate the shared buffer.

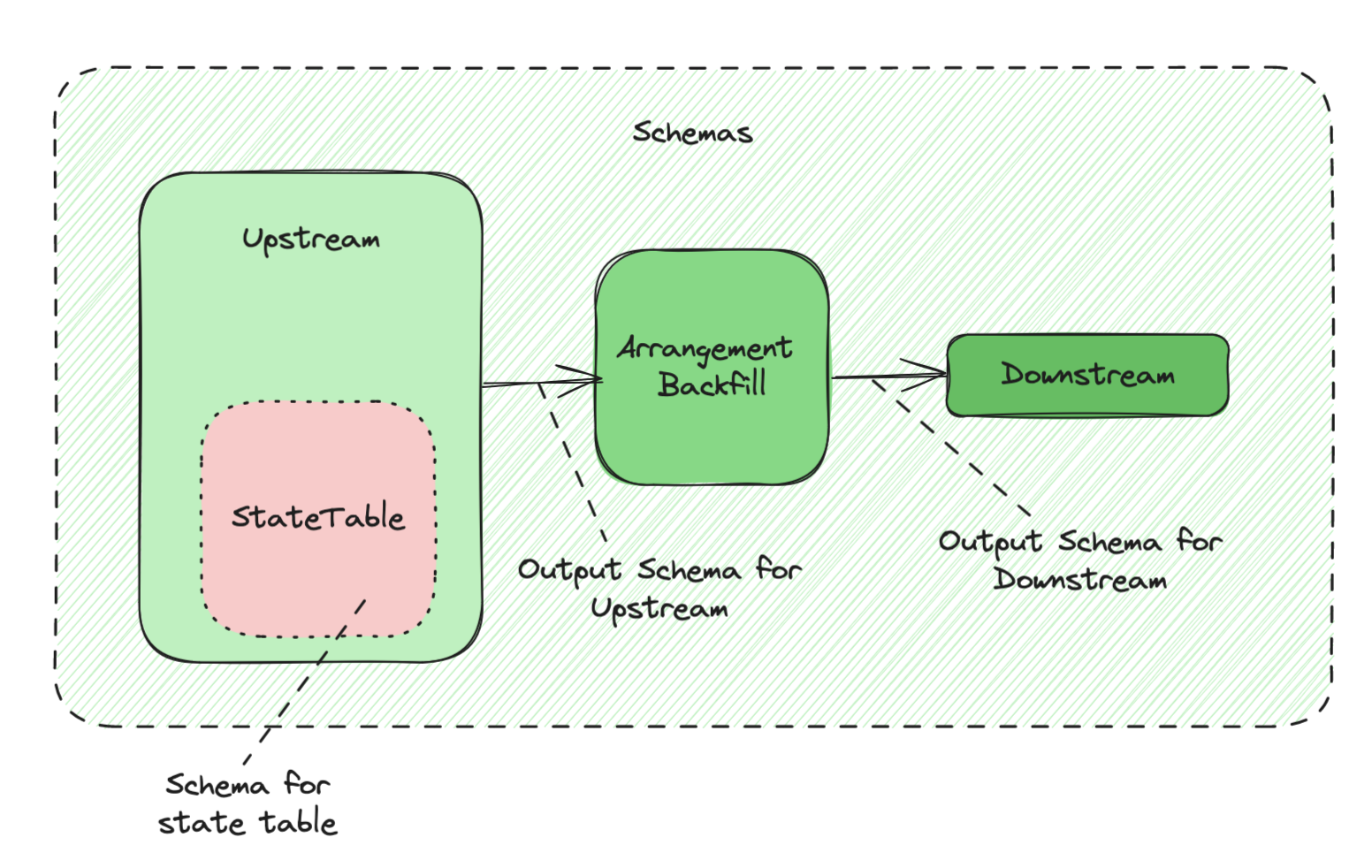

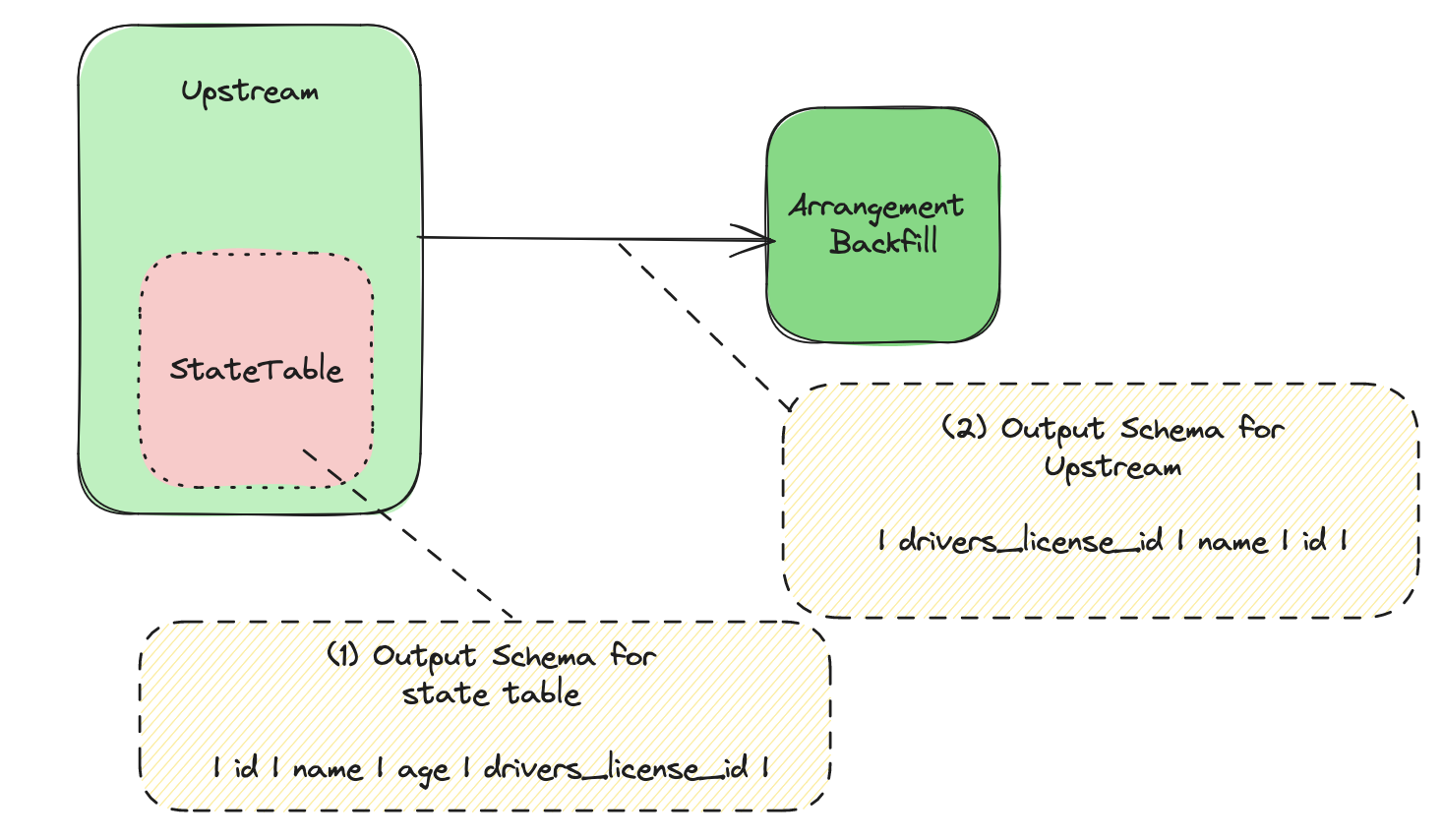

Arrangement Backfill Frontend

Arrangement Backfill is constructed in the following phases in the optimizer:

(1) LogicalScan -> (2) StreamTableScan -> (3) PbStreamScan (MergeNode, ..)

From 2 to 3, we will compute the output_indices (A) from upstream to backfill, and the output_indices (B) from backfill to downstream.

(B) will always be a subset of (A). The full PK is needed for backfilling, but it is not always needed after that.

For example, consider the following queries.

create table t1(id bigint primary key, i bigint);

create materialized view mv1 as select id, i from t1 order by id, i;

create materialized view mv2 as select id from mv1;

mv1 has the following plan:

StreamMaterialize { columns: [id, i], stream_key: [id], pk_columns: [id, i], pk_conflict: NoCheck }

└─StreamTableScan { table: t1, columns: [id, i] }

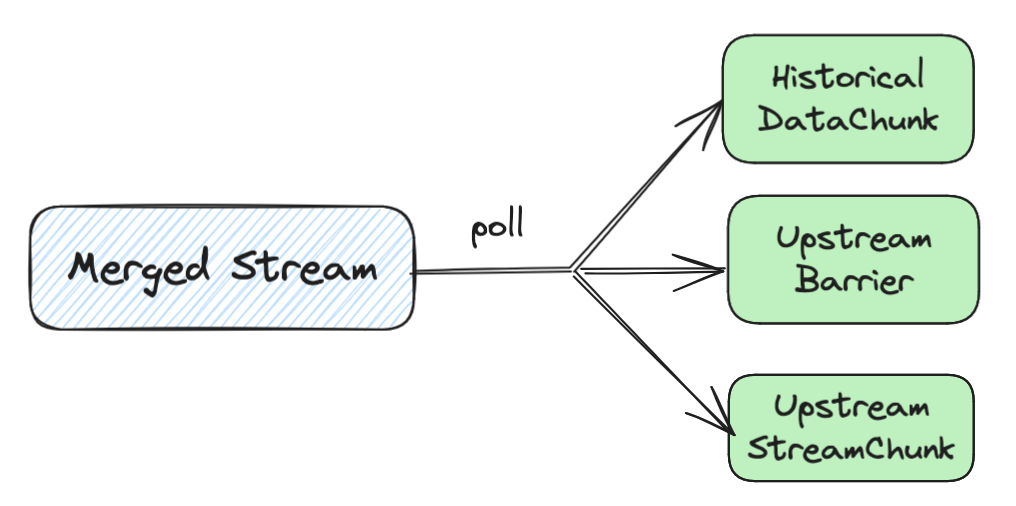

mv2 has the following plan: